Decoding Auto-GPT

There have been many interesting, complex, and innovative solutions since the release of ChatGPT. The community has explored countless possibilities for improving its capabilities.

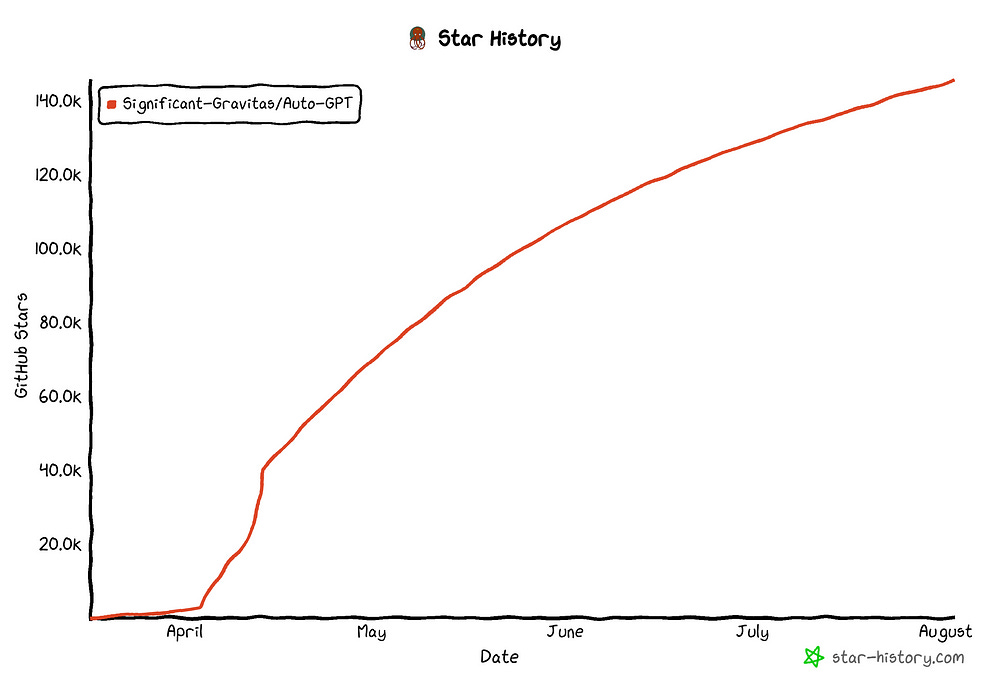

One of those is the well-known Auto-GPT package. With more than 140k stars, it is one of the highest-ranking repositories on GitHub!

Auto-GPT is an attempt at making GPT-4 fully autonomous.

Auto-GPT gives GPT-4 the power to make its own decisions

It sounds incredible and it definitely is! But how does it work?

In this post, we will go through Auto-GPT’s architecture and explore how it achieves autonomous behavior.

The Architecture

Auto-GPT has an overall architecture, or a main loop of sorts, that it uses to model autonomous behavior.

Let’s start by describing this overall loop, after which we will go through each step in depth:

The core of Auto-GPT is a cyclical sequence of steps:

- Initialize the prompt with summarized information
- GPT-4 proposes an action
- The action is executed
- Embed both the input and output of this cycle
- Save embeddings to a vector database

These 5 steps make up the core of Auto-GPT and represent its main autonomous behavior.
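To make the control flow concrete, the cycle can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Auto-GPT’s actual code: `run_agent_loop`, the `task_complete` stop command, and the three callables are hypothetical stand-ins for GPT-4, the tools, and the summarizer, and the embedding step is reduced to appending text to a list.

```python
from typing import Callable


def run_agent_loop(
    propose_action: Callable[[str], dict],
    execute: Callable[[dict], str],
    summarize: Callable[[list], str],
    max_cycles: int = 10,
) -> list:
    """Run the five-step cycle until the model signals completion or max_cycles is hit."""
    memory = []                # stand-in for the vector database (steps 4-5)
    summary = "I was created"  # the summary before any action has been taken
    for _ in range(max_cycles):
        # Step 1: build the prompt from the summary plus a call to action
        prompt = f"Summary: {summary}\nWhich command should you use next?"
        # Step 2: the model proposes an action, e.g. {"name": ..., "args": {...}}
        action = propose_action(prompt)
        if action["name"] == "task_complete":  # hypothetical stop command
            break
        # Step 3: execute the action with whatever tool it names
        result = execute(action)
        # Steps 4-5: store input and output (embedding omitted in this sketch)
        memory.append(f"{prompt}\n{action}\n{result}")
        summary = summarize(memory)
    return memory


# Demo with scripted stand-ins instead of GPT-4 and real tools
scripted = iter([
    {"name": "web_search", "args": {"query": "vegan chocolate recipe"}},
    {"name": "task_complete", "args": {}},
])
demo_memory = run_agent_loop(
    propose_action=lambda prompt: next(scripted),
    execute=lambda action: f"results for {action['args']['query']}",
    summarize=lambda mem: f"I have taken {len(mem)} action(s)",
)
```

Passing the model and tools in as callables keeps the loop itself visible and lets it run without an API key.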

Before we go through each step in depth, note that one step precedes this cyclical sequence: initializing the agent.

0. Initializing the Agent

Before Auto-GPT can complete a task fully autonomously, it first needs to initialize an Agent. This agent essentially describes who GPT-4 is and what goal it should pursue.

Let’s say that we want Auto-GPT to create a recipe for vegan chocolate.

With that goal in mind, we need to give GPT-4 a bit of context about what an agent should be and what it should achieve:

We create a prompt defining two things:

- Create 5 highly effective goals (these can be updated later on)
- Create an appropriate role-based name (_GPT)

The name helps GPT-4 continuously remember which role it should play. The goals are especially helpful for breaking the task into small steps it can achieve.

Next, we give an example of what the desired output should be:

Giving examples to generative Large Language Models tends to work very well. By showing the model what the output should look like, we make it easier for it to generate accurate answers.
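Such a prompt can be assembled programmatically. The sketch below assumes a plain-text “Name / Description / Goals” output format; `build_agent_creation_prompt`, `FEW_SHOT_EXAMPLE`, and the TravelGPT example are hypothetical illustrations rather than Auto-GPT’s exact wording.

```python
# Hypothetical few-shot example that shows the model the expected output format
FEW_SHOT_EXAMPLE = """Example input:
Help me plan a weekend trip to Rome

Example output:
Name: TravelGPT
Description: an AI travel planner that designs short, budget-friendly city trips.
Goals:
- Find affordable flights and accommodation
- Draft a day-by-day itinerary
"""


def build_agent_creation_prompt(task: str) -> str:
    """Ask the model for a role-based name, a description, and up to 5 goals."""
    instructions = (
        "Create 5 highly effective goals (these can be updated later on) "
        "and an appropriate role-based name (_GPT) for an autonomous agent "
        "whose goals are aligned with completing the task below."
    )
    # Instructions first, then the worked example, then the actual task
    return f"{instructions}\n\n{FEW_SHOT_EXAMPLE}\nTask:\n{task}\n"


prompt = build_agent_creation_prompt("Create a recipe for vegan chocolate")
```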

When we pass this prompt to GPT-4 using Auto-GPT, we get the following response:

It seems that GPT-4 has created a description of RecipeGPT for us. We can give this context to GPT-4 as a system prompt so that it continuously remembers its purpose.
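Turning the model’s reply into a reusable agent definition is mostly string parsing. Assuming the reply follows the “Name / Description / Goals” format (the sample reply below is invented for illustration), a sketch could look like this; `AgentConfig` and `parse_agent_response` are hypothetical names.

```python
import re
from dataclasses import dataclass, field


@dataclass
class AgentConfig:
    name: str
    description: str
    goals: list = field(default_factory=list)


def parse_agent_response(text: str) -> AgentConfig:
    """Parse a 'Name: / Description: / Goals:' reply (assumed format).

    Raises AttributeError if the Name or Description line is missing.
    """
    name = re.search(r"^Name:\s*(.+)$", text, re.MULTILINE).group(1).strip()
    description = re.search(r"^Description:\s*(.+)$", text, re.MULTILINE).group(1).strip()
    # Goals are assumed to be listed one per line, each starting with "- "
    goals = re.findall(r"^-\s*(.+)$", text, re.MULTILINE)
    return AgentConfig(name, description, goals[:5])


response = """Name: RecipeGPT
Description: an AI chef that creates vegan chocolate recipes.
Goals:
- Research popular vegan chocolate recipes
- Write a clear, step-by-step recipe
"""
config = parse_agent_response(response)
# This line would open the system prompt so the agent keeps its purpose in mind
system_intro = f"You are {config.name}, {config.description}"
```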

Now that Auto-GPT has created a description of its agent, along with clear goals, it can start by taking its first autonomous action.

1. First Prompt

The very first step in its cyclical sequence is creating the prompt that triggers an action.

The prompt consists of three components:

- System Prompt
- Summary
- Call to Action

We will go into the summary a bit later, but the call to action is nothing more than asking GPT-4 which command it should use. The commands GPT-4 can use are defined in its System Prompt.
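With a chat-style API, the three components map naturally onto a list of messages. The sketch below uses the common `role`/`content` message format; `build_cycle_messages` and the exact wording of the summary header and call to action are assumptions, not Auto-GPT’s literal strings.

```python
def build_cycle_messages(system_prompt: str, summary: str) -> list:
    """Combine system prompt, summary, and call to action into chat messages."""
    call_to_action = (
        "Determine which command to use next, and respond using the "
        "JSON format specified above."
    )
    return [
        # 1. Guidelines, goals, and available commands
        {"role": "system", "content": system_prompt},
        # 2. Summary of everything the agent has done so far
        {"role": "system", "content": f"Summary of your past actions:\n{summary}"},
        # 3. The call to action that asks for the next command
        {"role": "user", "content": call_to_action},
    ]


messages = build_cycle_messages(
    system_prompt="You are RecipeGPT, an AI chef that creates vegan chocolate recipes.",
    summary="I was created",
)
```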

System Prompt

The system prompt is the context that we give to GPT-4 so that it remembers certain guidelines that it should follow.

As shown above, it consists of six guidelines:

- The goals and description of the initialized Agent
- Constraints it should adhere to
- Commands it can use
- Resources it has access to
- Evaluation steps
- Example of a valid JSON output

The last five guidelines essentially act as constraints the Agent should adhere to.

Here is a more in-depth overview of what these guidelines and constraints generally look like:

As you can see, the system prompt sketches the boundaries within which GPT-4 can act. For example, “Resources” describes that GPT-4 can use GPT-3.5 Agents to delegate simple tasks. Similarly, *“Evaluation”* tells GPT-4 that it should continuously self-criticize its own behavior to improve its next actions.
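The six guidelines can be rendered into a single system prompt string. In this sketch, `build_system_prompt`, the section headers, the sample constraint and command, and the JSON skeleton are illustrative assumptions; the JSON keys mirror the thoughts and command fields the agent is asked to return in step 2.

```python
import json


def numbered(items):
    """Format a list as '1. item' lines."""
    return "\n".join(f"{i}. {item}" for i, item in enumerate(items, start=1))


def build_system_prompt(intro, goals, constraints, commands, resources, evaluation):
    """Render the six guideline sections into a single system prompt."""
    # Example of valid JSON output (illustrative skeleton, not the exact Auto-GPT text)
    example_output = {
        "thoughts": {
            "text": "thought",
            "reasoning": "reasoning",
            "plan": "- short bulleted list of the long-term plan",
            "criticism": "constructive self-criticism",
            "speak": "thoughts summary to say to the user",
        },
        "command": {"name": "command name", "args": {"arg name": "value"}},
    }
    return "\n\n".join([
        intro,
        "GOALS:\n" + numbered(goals),
        "Constraints:\n" + numbered(constraints),
        "Commands:\n" + numbered(commands),
        "Resources:\n" + numbered(resources),
        "Performance Evaluation:\n" + numbered(evaluation),
        "You should only respond in JSON format as described below\n"
        "Response Format:\n" + json.dumps(example_output, indent=4),
    ])


system_prompt = build_system_prompt(
    intro="You are RecipeGPT, an AI chef that creates vegan chocolate recipes.",
    goals=["Research popular vegan chocolate recipes"],
    constraints=["No user assistance"],
    commands=['web_search: Search the web, args: "query": "<query>"'],
    resources=["GPT-3.5 powered Agents for delegation of simple tasks"],
    evaluation=["Constructively self-criticize your big-picture behavior constantly"],
)
```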

Example of the First Prompt

Together, the very first prompt looks a bit like the following:

Notice that “I was created” is mentioned in blue. Typically, this part would contain a summary of all the actions the Agent has taken. Since it was just created, it has not taken any actions yet, so the summary is nothing more than “I was created”.

2. GPT-4 Proposes an Action

In step 2, we give GPT-4 the prompt we defined in the previous step. It then proposes an action to take, which should adhere to the following format:

You can see six individual steps being mentioned:

- Thoughts
- Reasoning
- Plan
- Criticism
- Speak
- Action

These steps describe a prompting format called ReAct (Reason + Act).

ReAct is one of Auto-GPT’s superpowers!

ReAct allows GPT-4 to mimic self-criticism and demonstrate more complex reasoning than is possible if we just ask the model directly.

Whenever we ask GPT-4 a question using the ReAct framework, we ask it to output individual thoughts, actions, and observations before coming to a conclusion.

When the model is made to reason extensively, it tends to give more accurate answers than when it answers the question directly.

In our example, Auto-GPT has extended the base ReAct framework and generates the following response:

As you can see, it follows the ReAct pipeline that we described before but includes additional criticism and reasoning steps.

It proposes to search the web to extract more information about popular recipes.
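Because the response is JSON, it can be validated before anything is executed. A minimal sketch, assuming a `thoughts` object holding the five reasoning fields listed above and a `command` object with `name` and `args`; `parse_response` and the sample reply are hypothetical.

```python
import json

REQUIRED_THOUGHT_KEYS = {"text", "reasoning", "plan", "criticism", "speak"}


def parse_response(raw: str) -> tuple:
    """Parse the model's JSON reply into (thoughts, command_name, command_args)."""
    reply = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    thoughts = reply["thoughts"]
    missing = REQUIRED_THOUGHT_KEYS - set(thoughts)
    if missing:
        raise ValueError(f"missing thought fields: {sorted(missing)}")
    command = reply["command"]
    return thoughts, command["name"], command.get("args", {})


# Invented sample reply in the assumed format
raw_reply = json.dumps({
    "thoughts": {
        "text": "I should look up popular vegan chocolate recipes.",
        "reasoning": "Knowing existing recipes helps me create a good one.",
        "plan": "- search the web\n- summarize findings\n- write the recipe",
        "criticism": "I should not rely on a single source.",
        "speak": "I will search the web for vegan chocolate recipes.",
    },
    "command": {"name": "web_search", "args": {"query": "popular vegan chocolate recipes"}},
})
thoughts, command_name, command_args = parse_response(raw_reply)
```

Rejecting malformed replies early means a bad generation can be retried instead of triggering an unintended action.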

3. Execute Action

Because the response is in valid JSON format, we can extract what RecipeGPT wants to do. In this case, it calls for a web search:

In turn, Auto-GPT executes the web search:

Searching the web is simply a tool at its disposal; it generates a file containing the main body of the page.

Since we explained to GPT-4 in its system prompt that it can use web search, it considers this a valid action.

Auto-GPT is as autonomous as the number of tools it possesses

Do note that if the only tool at its disposal is searching the web, we can start to question how autonomous such a model really is!

Either way, we save the output to a file for later use.
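Executing an action boils down to looking up the command name in a registry of tools and running it. In this sketch `web_search` is a hypothetical offline stand-in that returns a fake page body, `COMMANDS` and `execute_command` are illustrative names, and the output is written to a temporary directory rather than Auto-GPT’s own workspace.

```python
import tempfile
from pathlib import Path


def web_search(query: str) -> str:
    """Hypothetical stand-in for a real web-search tool (no network access here)."""
    return f"Main body of a page about: {query}"


# Registry of the commands the system prompt told the model it may use
COMMANDS = {"web_search": web_search}


def execute_command(name: str, args: dict, output_dir: Path) -> Path:
    """Run a registered command and save its output to a file for later use."""
    if name not in COMMANDS:
        # A command outside the registry is not a valid action, so refuse to run it
        raise KeyError(f"Unknown command: {name}")
    result = COMMANDS[name](**args)
    output_file = output_dir / f"{name}_output.txt"
    output_file.write_text(result, encoding="utf-8")
    return output_file


out_dir = Path(tempfile.mkdtemp())
saved = execute_command("web_search", {"query": "vegan chocolate recipes"}, out_dir)
```

The registry makes the point above explicit: the agent can only do what its tools allow.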

4. Embed Everything!

Every step Auto-GPT has taken thus far is vital information for deciding its next steps. Especially when it needs to take dozens of steps (to take over the world, for example), remembering what it has done thus far is important.

One way of doing so is to embed the prompts and the outputs it has generated. Embedding converts text into numerical representations (embeddings) that we can store and search later on.

These embeddings are generated using OpenAI’s *text-embedding-ada-002* model, which works well across many use cases.
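With the official `openai` Python client (v1 and later), an embedding request looks like `client.embeddings.create(model="text-embedding-ada-002", input=text)`, and the model returns 1536-dimensional vectors. To keep the example runnable without an API key, the sketch below swaps in a toy hashed bag-of-words embedding; `toy_embed` is a hypothetical stand-in that only captures word overlap, not meaning.

```python
import hashlib
import math


def toy_embed(text: str, dim: int = 64) -> list:
    """Hashed bag-of-words embedding: a stand-in for text-embedding-ada-002."""
    vector = [0.0] * dim
    for token in text.lower().split():
        # Map each token to a fixed bucket using a stable hash
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    # L2-normalize so that a dot product equals cosine similarity
    return [v / norm for v in vector] if norm else vector


step_text = "Prompt: which command next? Output: web_search vegan chocolate"
embedding = toy_embed(step_text)
```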

5. Vector Database + Summarization

After generating the embeddings, we need a place to store them. Pinecone is often used as the vector database, but many other systems work as long as they let you quickly find similar vectors.

The vector database allows us to quickly find information for an input query.
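Any store that supports nearest-neighbour search will do. As a stand-in for Pinecone, here is a hypothetical in-memory store that ranks stored vectors by cosine similarity with a brute-force scan; production vector databases typically use approximate nearest-neighbour indexes to scale this to millions of vectors.

```python
import math


def cosine(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Minimal, hypothetical stand-in for a vector database such as Pinecone."""

    def __init__(self):
        self.records = []  # list of (vector, text) pairs

    def add(self, vector: list, text: str) -> None:
        self.records.append((vector, text))

    def query(self, vector: list, top_k: int = 3) -> list:
        """Return the texts of the top_k stored vectors most similar to `vector`."""
        ranked = sorted(self.records, key=lambda rec: cosine(rec[0], vector), reverse=True)
        return [text for _, text in ranked[:top_k]]


store = InMemoryVectorStore()
store.add([1.0, 0.0, 0.0], "searched the web for vegan chocolate recipes")
store.add([0.0, 1.0, 0.0], "wrote recipe draft to recipe.txt")
store.add([0.9, 0.1, 0.0], "read a page about cocoa butter alternatives")
top = store.query([1.0, 0.0, 0.0], top_k=2)
```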

We can query the vector database to retrieve the steps it has taken thus far. Using that information, we ask GPT-4 to create a **summary** of all actions it has taken so far:

This summary is then used to construct the prompt as we did in step 1.

That way, it can “remember” what it has done thus far and think about the next steps to be taken.
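The summarization step is just another prompt. The sketch below, built around the hypothetical `build_summary_prompt`, shows one way to ask GPT-4 to fold newly retrieved steps into the running summary; the instruction wording is an assumption.

```python
def build_summary_prompt(previous_summary: str, new_events: list) -> str:
    """Ask the model to fold newly retrieved steps into the running summary."""
    events = "\n".join(f"- {event}" for event in new_events)
    return (
        "You are a summarizing agent. Update the summary below with the new events, "
        "keeping it concise and written in the first person.\n\n"
        f"Current summary:\n{previous_summary}\n\n"
        f"New events:\n{events}\n\n"
        "Updated summary:"
    )


summary_prompt = build_summary_prompt(
    "I was created", ["searched the web for vegan chocolate recipes"]
)
```

Updating the previous summary, rather than re-summarizing every step, keeps the prompt short as the number of cycles grows.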

This completes the very first cycle of Auto-GPT’s autonomous behavior!

6. Do it all over again!

As you might have guessed, the cycle continues from where we started, asking GPT-4 to take action based on a history of actions.

Auto-GPT will continue until it reaches its goal or until you interrupt it.

During this cyclical process, it can keep track of estimated costs in order to make sure you do not spend too much on your Agent.
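Cost tracking amounts to multiplying token counts by per-token prices and comparing the total against a budget. In this sketch the prices in `PRICE_PER_1K` are illustrative placeholders (check your provider’s current pricing) and `CostTracker` is a hypothetical helper; with the OpenAI API, the token counts would come from the response’s `usage.prompt_tokens` and `usage.completion_tokens` fields.

```python
from dataclasses import dataclass

# Illustrative prices in USD per 1,000 tokens; not current pricing
PRICE_PER_1K = {"prompt": 0.03, "completion": 0.06}


@dataclass
class CostTracker:
    budget: float       # maximum spend in USD before the agent should stop
    spent: float = 0.0  # running estimate of the cost so far

    def record(self, prompt_tokens: int, completion_tokens: int) -> None:
        """Add the estimated cost of one model call."""
        self.spent += (prompt_tokens / 1000) * PRICE_PER_1K["prompt"]
        self.spent += (completion_tokens / 1000) * PRICE_PER_1K["completion"]

    def over_budget(self) -> bool:
        return self.spent >= self.budget


tracker = CostTracker(budget=1.00)
tracker.record(prompt_tokens=2000, completion_tokens=500)
```

Checking `over_budget()` at the start of each cycle is one simple way to stop the loop before costs run away.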

In the future, especially with the release of Llama 2, I expect and hope that local models can reliably be used in Auto-GPT!