Topic Modeling on Images? Why not?!

Topic Modeling is a collection of techniques that allow the user to find topics in large collections of documents without supervision. It can be highly advantageous when modeling and performing exploratory data analysis (EDA) on the content of those documents.

A while ago I created a Topic Modeling technique called BERTopic, which leverages BERT embeddings and a class-based TF-IDF to create dense clusters that allow for easily interpretable topics.

After a while, though, I started thinking about its applications in other domains, such as computer vision. How cool would it be if we could apply Topic Modeling to images?

It took a while, but after some experimentation I came up with a solution: Concept 💡!

Concept is a package that applies Topic Modeling to images and text simultaneously. However, since topics typically refer to the written or spoken word, the term does not fully capture the meaning of grouped images. Instead, we refer to these groups of images and text as concepts.

Concept Modeling is the generalization of Topic Modeling to images and text

Thus, the Concept package performs Concept Modeling, a type of statistical modeling for discovering abstract concepts that occur in a collection of images and their corresponding documents.

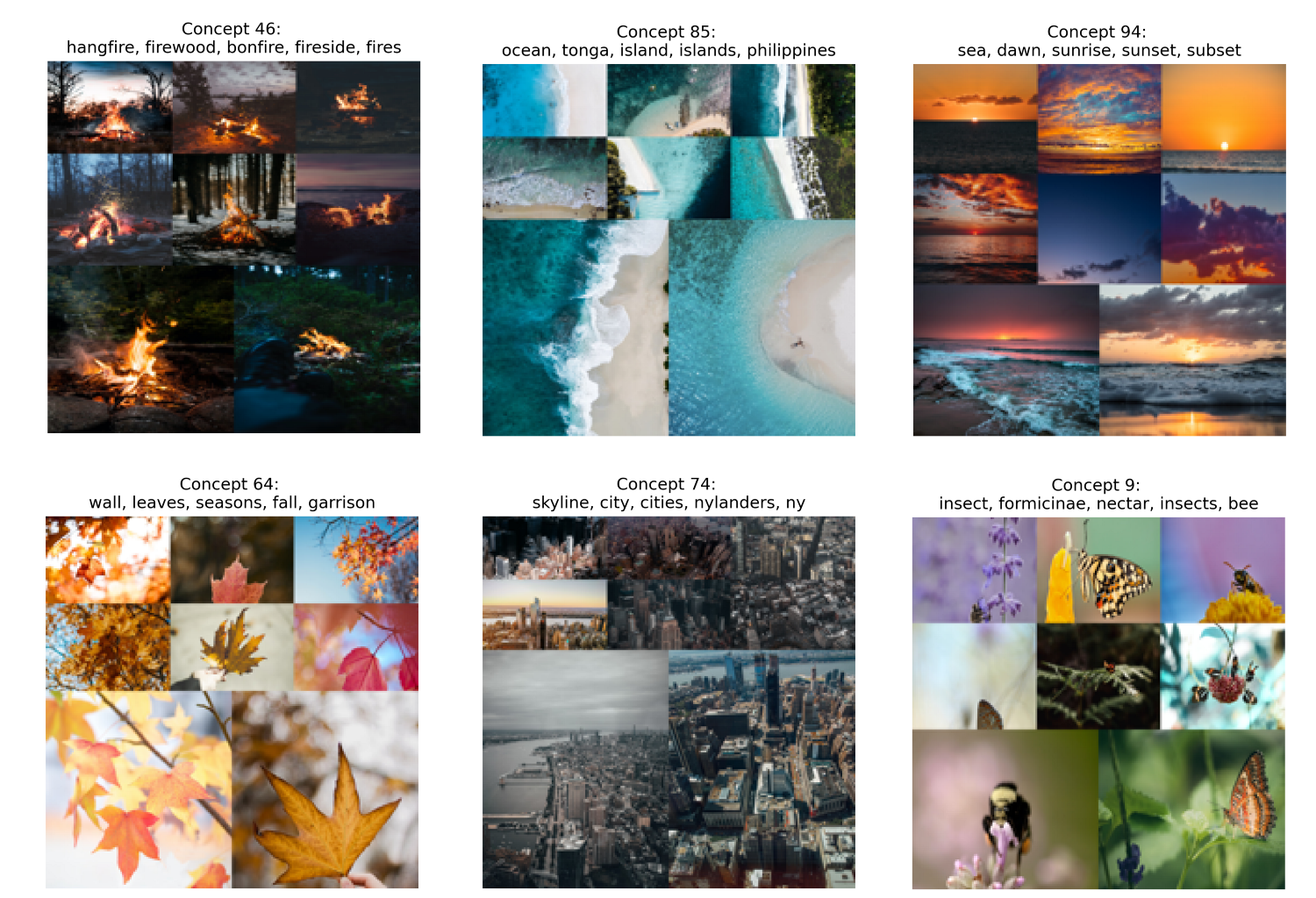

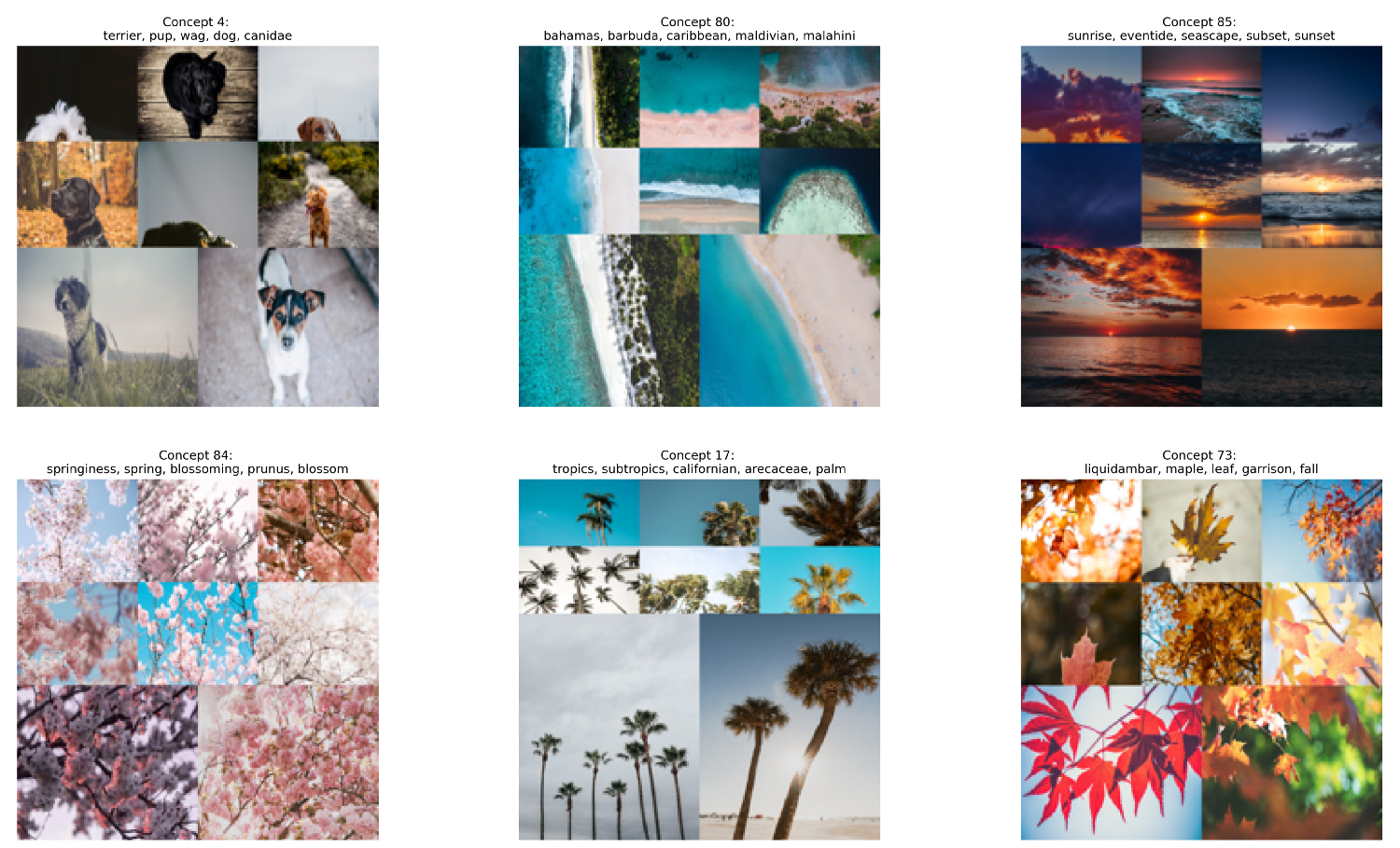

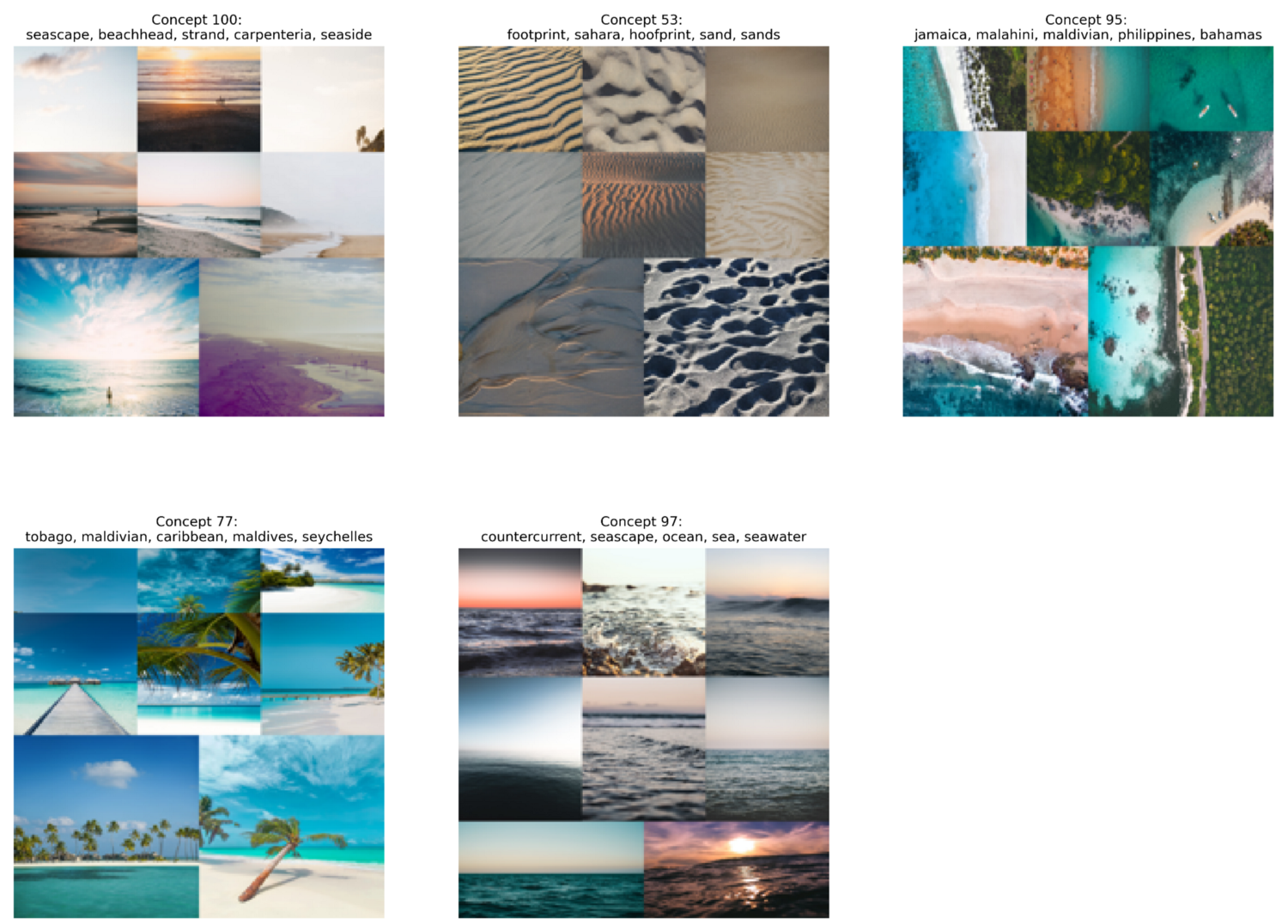

To give you an idea, the concepts below are retrieved through Concept Modeling:

As you might have noticed, we can interpret these concepts through their textual representation and through their visual representation. The true strength of Concept Modeling, however, can be found by combining these representations.

Concept Modeling allows for a multi-modal representation of concepts

An image tells you more than a thousand words. But what if we add words to the image? The synergy between these two communication methods can enrich the interpretation and understanding of a concept.

In this article, I will go through the steps of creating your own Concept Model using Concept. You can follow along with the Google Colab notebook here.

Step 1: Install Concept

We can install Concept easily through PyPI:

```bash
pip install concept
```

Step 2: Prepare Images

To perform Concept Modeling, we need a number of images to cluster. We will download 25,000 images from Unsplash, since the Sentence-Transformers project has already packaged them neatly.

```python
import os
import glob
import zipfile

from tqdm import tqdm
from sentence_transformers import util

# Download 25k images from Unsplash
img_folder = 'photos/'
if not os.path.exists(img_folder) or len(os.listdir(img_folder)) == 0:
    os.makedirs(img_folder, exist_ok=True)

    photo_filename = 'unsplash-25k-photos.zip'
    if not os.path.exists(photo_filename):  # Download the dataset if it does not exist yet
        util.http_get('http://sbert.net/datasets/' + photo_filename, photo_filename)

    # Extract all images
    with zipfile.ZipFile(photo_filename, 'r') as zf:
        for member in tqdm(zf.infolist(), desc='Extracting'):
            zf.extract(member, img_folder)

# Load image paths
img_names = list(glob.glob('photos/*.jpg'))
```

Once the images are prepared, we could already use them in Concept without any text. However, the model would then create no textual representations. So the next step is to prepare our documents.

Step 3: Prepare Text

The interesting aspect of Concept is that any text can be fed to the model. Ideally, we want to feed it text that is most relevant to the images at hand. We typically have some understanding of what can be found in our images.

However, this might not always be the case. So, for demonstration purposes, we are going to feed the model a set of English nouns taken from WordNet:

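```python
import random

import nltk

nltk.download("wordnet")

from nltk.corpus import wordnet as wn

# Collect single-word nouns; the same lemma appears in several synsets,
# so deduplicate first to avoid sampling the same noun twice
all_nouns = sorted({word for synset in wn.all_synsets('n')
                    for word in synset.lemma_names()
                    if "_" not in word})

# Sample 50,000 distinct nouns (or all of them, if fewer are available)
selected_nouns = random.sample(all_nouns, min(50_000, len(all_nouns)))
```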

In the example above, we sample 50,000 random nouns for two reasons. First, there is no need to use every noun in the English dictionary; 50,000 nouns should cover a sufficiently broad range of entities. Second, it speeds up computation, since fewer words need to be embedded.

In practice, if you have textual data that you know is relevant to the images, use those instead of the nouns!

Step 4: Train Model

The next step is training the model! As always, we kept this step straightforward: simply feed the image paths and our selection of nouns to the model:

```python
from concept import ConceptModel

concept_model = ConceptModel()
concepts = concept_model.fit_transform(img_names, docs=selected_nouns)
```

The concepts variable contains the predicted concept for each image.

The underlying model of Concept is OpenAI's CLIP, a neural network trained on a large number of image-text pairs. As a result, generating the embeddings benefits considerably from a GPU.

Lastly, access concept_model.frequency to see a dataframe containing the frequency of each concept.
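A similar overview can also be derived directly from the concepts variable. Below is a minimal sketch that assumes concepts is a sequence of integer concept ids, one per image. It also assumes that -1 marks outlier images, which is HDBSCAN's convention; I have not confirmed how Concept itself handles outliers. The function name and example ids are hypothetical, and the output does not reproduce the exact layout of concept_model.frequency.

```python
from collections import Counter


def concept_frequency(concepts, drop_outliers=True):
    """Count how many images were assigned to each concept.

    Returns (concept_id, count) pairs sorted by descending count.
    Assumes -1 denotes outlier images (HDBSCAN's convention).
    """
    counts = Counter(c for c in concepts if not (drop_outliers and c == -1))
    return counts.most_common()


# Hypothetical predicted concept ids for seven images
example_concepts = [3, 1, 3, -1, 2, 3, 1]
print(concept_frequency(example_concepts))  # [(3, 3), (1, 2), (2, 1)]
```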

NOTE: Use ConceptModel(embedding_model="clip-ViT-B-32-multilingual-v1") to select a model that supports 50+ languages!

Pre-trained image embeddings

For those who want to try out this demo without access to a GPU, we can load pre-computed image embeddings from the Sentence-Transformers site:

```python
import os
import pickle

from sentence_transformers import util

# Load pre-trained image embeddings
emb_filename = 'unsplash-25k-photos-embeddings.pkl'
if not os.path.exists(emb_filename):  # Download the embeddings if they do not exist yet
    util.http_get('http://sbert.net/datasets/' + emb_filename, emb_filename)

with open(emb_filename, 'rb') as fIn:
    img_names, image_embeddings = pickle.load(fIn)

# Prefix the file names with the folder the images were extracted to
img_names = [f"photos/{path}" for path in img_names]
```

Then, we add the pre-trained embeddings to the model and train it:

```python
from concept import ConceptModel

# Train Concept using the pre-trained image embeddings
concept_model = ConceptModel()
concepts = concept_model.fit_transform(img_names,
                                       image_embeddings=image_embeddings,
                                       docs=selected_nouns)
```

Step 5: Visualize Concepts

Now for the fun stuff, visualizing the concepts!

As mentioned before, the resulting concepts are multi-modal, that is, both visual and textual in nature. We need a way to represent both in a single overview.

To do this, we take a number of images that best represent each concept and find the nouns that in turn best represent these images.

In practice, creating the visualization is as straightforward as:

```python
fig = concept_model.visualize_concepts()
```

Many of the pictures within our dataset are related to nature. However, if we look a bit further we can see more interesting concepts:

The results above give a nice example of how to intuitively think about concepts in Concept Modeling. Not only can we see the visual representation through a collection of images, but the textual representation also helps us further understand what can be found in these concepts.

Step 6: Search Concepts

We can quickly search for specific concepts by embedding a search term and finding the concept cluster embeddings that are most similar to it. As an example, let us search for the term "beach" and see what we find. To do this, we simply run the following:

```python
>>> search_results = concept_model.find_concepts("beach")
>>> search_results
[(100, 0.277577825349102),
 (53, 0.27431058773894657),
 (95, 0.25973751319723837),
 (77, 0.2560122597417548),
 (97, 0.25361988261846297)]
```

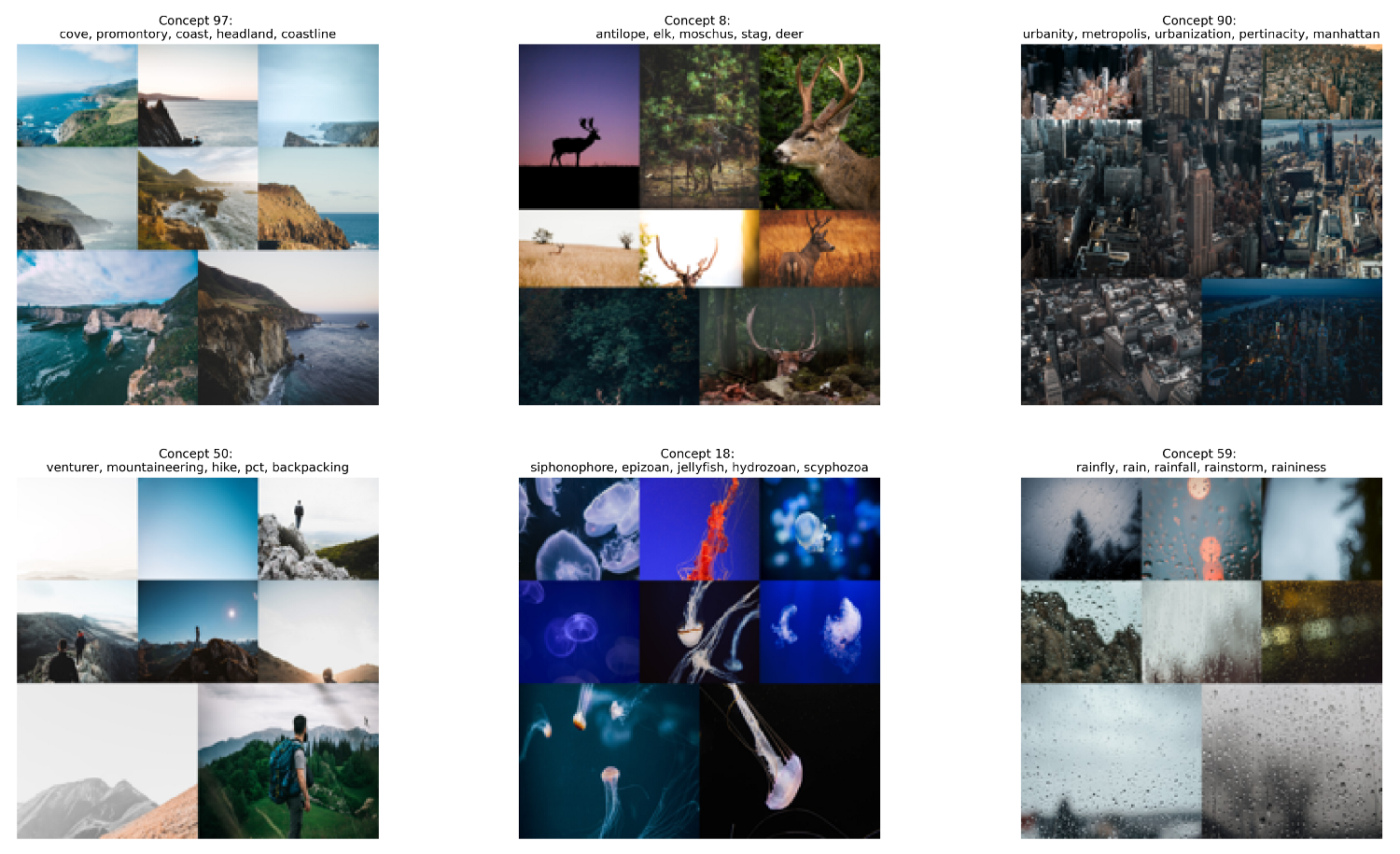

Each tuple contains two values: the first is the concept cluster and the second is its similarity to the search term. The five most similar concepts are returned.
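To make the search step concrete, here is a minimal sketch of ranking concepts by cosine similarity to a query embedding. It returns (concept_id, similarity) tuples in the same shape as the output above. It assumes each concept has one embedding and that concept ids are simply row indices. The function name find_concepts_sketch is hypothetical and is not Concept's implementation. In practice, the query would be the CLIP text embedding of "beach"; the tiny 2-D vectors below are made-up stand-ins.

```python
import numpy as np


def find_concepts_sketch(query_embedding, concept_embeddings, top_n=5):
    """Rank concepts by cosine similarity to a query embedding.

    query_embedding: shape (d,); concept_embeddings: shape (n_concepts, d),
    where row i is assumed to be the embedding of concept i.
    Returns (concept_id, similarity) tuples, most similar first.
    """
    query = query_embedding / np.linalg.norm(query_embedding)
    concepts = concept_embeddings / np.linalg.norm(concept_embeddings, axis=1, keepdims=True)
    similarities = concepts @ query                # cosine similarity per concept
    top = np.argsort(similarities)[::-1][:top_n]   # highest similarity first
    return [(int(i), float(similarities[i])) for i in top]


# Made-up 2-D embeddings for three concepts and one query
concept_embeddings = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
query = np.array([1.0, 0.1])
print(find_concepts_sketch(query, concept_embeddings, top_n=3))
```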

Now, let us visualize those concepts to see how well the search function works:

```python
fig = concept_model.visualize_concepts(concepts=[concept for concept, _ in search_results])
```

As we can see, the resulting concepts are very similar to our search term! The multi-modal nature of the model allows us to easily search for concepts and images.

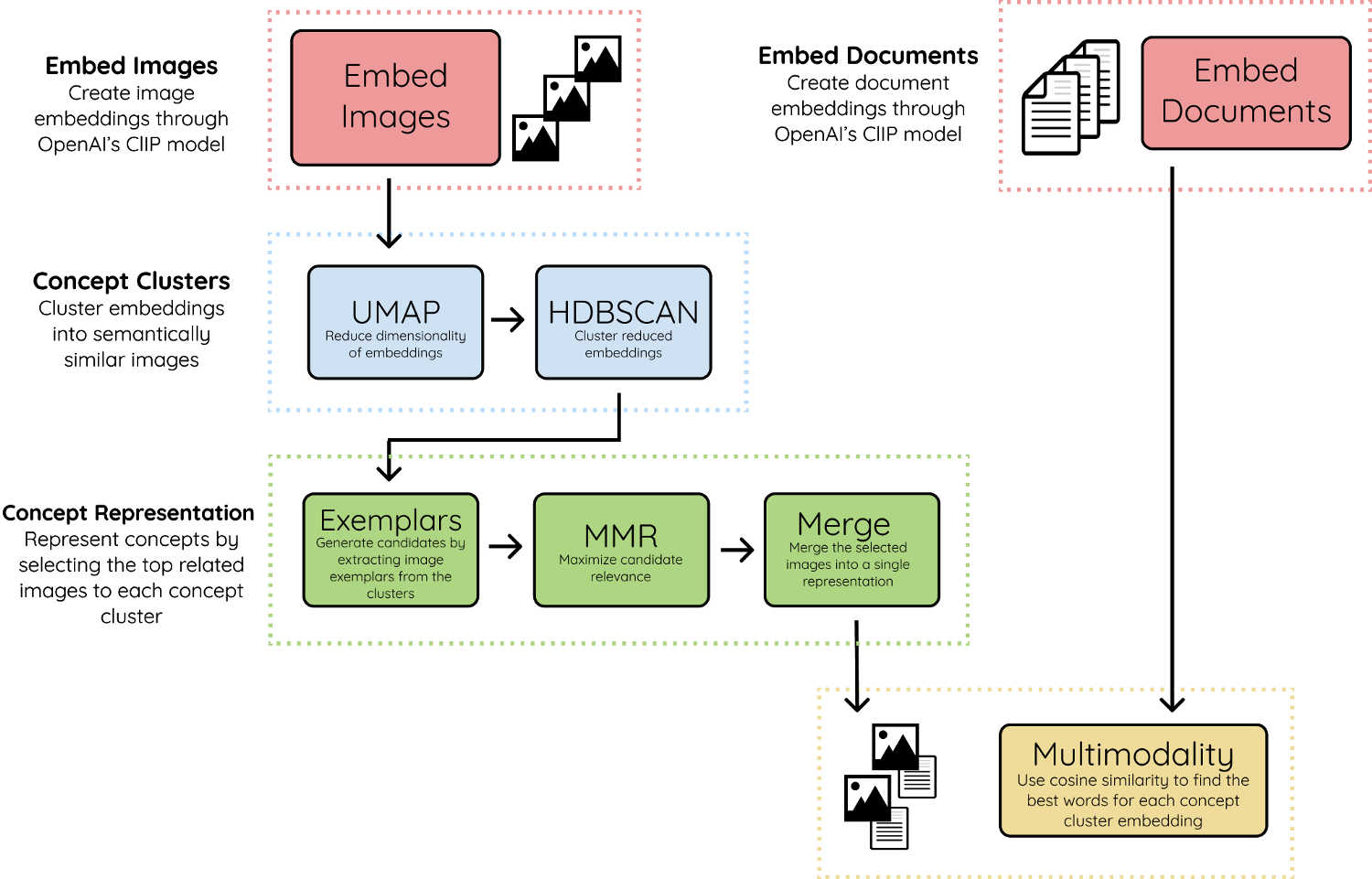

Step 7: Algorithmic Overview

For those who are interested in the underpinnings of Concept, below is an abstract overview of the method used to create the resulting concepts:

1. Embed Images & Documents

We start by embedding both images and documents into the same vector space using OpenAI's CLIP model. This allows us to make comparisons between images and text. The documents can be words, phrases, sentences, or whatever you feel best represents the concept clusters.

2. Concept Clusters

Using UMAP and HDBSCAN, we cluster the image embeddings into groups of images that are visually and semantically similar to each other. We refer to these clusters as concepts because they are multimodal in nature.
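Later steps compare each cluster with images, text, and search queries, so every concept needs a single embedding. Below is a minimal sketch under an assumption that the description above does not confirm: a concept's embedding is the mean of its member images' embeddings. The cluster labels would come from UMAP + HDBSCAN, which this sketch does not reproduce. It only assumes HDBSCAN's convention of labeling outliers -1. The function name is hypothetical.

```python
import numpy as np


def concept_embeddings_from_labels(image_embeddings, labels):
    """Average the image embeddings of each cluster into one concept embedding.

    labels holds one cluster id per image (as returned by a clusterer such
    as HDBSCAN); -1 marks outliers, which are excluded.
    Returns a dict mapping concept id -> mean embedding.
    """
    labels = np.asarray(labels)
    return {
        int(label): image_embeddings[labels == label].mean(axis=0)
        for label in np.unique(labels)
        if label != -1
    }


# Made-up embeddings for four images: two in concept 0, one in concept 1, one outlier
image_embeddings = np.array([[0.0, 0.0], [2.0, 2.0], [10.0, 10.0], [5.0, 5.0]])
print(concept_embeddings_from_labels(image_embeddings, [0, 0, 1, -1]))
```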

3. Concept Representation

To visually represent the concept clusters, we take the images most related to each concept, called exemplars. Depending on the size of the concept cluster, the number of exemplars can run into the hundreds, so a filter is needed.

We use MMR to select images that are most related to the concept embedding but still sufficiently dissimilar to each other. This way, we can show as much of the concept as possible. The selected images are then combined into a single image to create a single visual representation.
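The MMR filter can be sketched as follows. Maximal Marginal Relevance greedily picks the candidate that balances two things: similarity to the concept embedding, and dissimilarity to the images already selected. This is a generic MMR sketch, not Concept's source. The names and defaults (top_n=9, diversity=0.3) are hypothetical. Here diversity plays the role of 1 - λ in the usual MMR formulation.

```python
import numpy as np


def mmr(concept_embedding, candidate_embeddings, top_n=9, diversity=0.3):
    """Select diverse exemplars with Maximal Marginal Relevance.

    Each step picks the candidate maximizing
    (1 - diversity) * sim(candidate, concept) - diversity * max sim(candidate, selected).
    Returns indices into candidate_embeddings in selection order.
    """
    def normalize(x):
        return x / np.linalg.norm(x, axis=-1, keepdims=True)

    candidates = normalize(candidate_embeddings)
    relevance = candidates @ normalize(concept_embedding)   # similarity to the concept
    pairwise = candidates @ candidates.T                     # similarity between candidates

    selected = [int(np.argmax(relevance))]                   # start with the most relevant image
    remaining = [i for i in range(len(candidates)) if i != selected[0]]
    while remaining and len(selected) < top_n:
        redundancy = pairwise[np.ix_(remaining, selected)].max(axis=1)
        scores = (1 - diversity) * relevance[remaining] - diversity * redundancy
        best = remaining[int(np.argmax(scores))]
        selected.append(best)
        remaining.remove(best)
    return selected


# Made-up embeddings: candidate 1 is a near-duplicate of candidate 0
concept = np.array([1.0, 0.0])
candidates = np.array([[1.0, 0.0], [0.99, 0.01], [0.7, 0.7]])
print(mmr(concept, candidates, top_n=2, diversity=0.0))  # pure relevance: [0, 1]
print(mmr(concept, candidates, top_n=2, diversity=0.7))  # favors variety: [0, 2]
```

With diversity set to 0, the near-duplicate image is picked second. With a higher diversity, the more distinct image wins. That is exactly the trade-off that lets the combined image show as much of the concept as possible.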

4. Multimodality

Lastly, we take the textual embeddings and compare them with the created concept cluster embeddings. Using cosine similarity, we select for each concept the documents whose embeddings are most similar to that concept's embedding. This introduces multimodality into the concept representation.
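As a rough illustration of this last step, the sketch below assumes the documents have already been embedded with the same model as the images, so both live in one vector space. For each concept, it keeps the top_n documents with the highest cosine similarity. It is a simplified sketch, not Concept's exact procedure. The function name and the 2-D example vectors are hypothetical.

```python
import numpy as np


def top_words_per_concept(concept_embeddings, word_embeddings, words, top_n=5):
    """Return, for each concept, the top_n words with the highest cosine similarity.

    concept_embeddings: (n_concepts, d); word_embeddings: (n_words, d) in the
    same embedding space; words: list of n_words strings.
    """
    c = concept_embeddings / np.linalg.norm(concept_embeddings, axis=1, keepdims=True)
    w = word_embeddings / np.linalg.norm(word_embeddings, axis=1, keepdims=True)
    similarity = c @ w.T                                # (n_concepts, n_words)
    top = np.argsort(-similarity, axis=1)[:, :top_n]    # most similar words first
    return [[words[j] for j in row] for row in top]


# Made-up 2-D embeddings standing in for CLIP vectors
concepts = np.array([[1.0, 0.0], [0.0, 1.0]])
words = ["sand", "wave", "tree", "leaf"]
word_embeddings = np.array([[0.9, 0.1], [1.0, 0.3], [0.1, 1.0], [0.2, 0.9]])
print(top_words_per_concept(concepts, word_embeddings, words, top_n=2))
# [['sand', 'wave'], ['tree', 'leaf']]
```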

Thank you for reading!

If you are, like me, passionate about AI, Data Science, or Psychology, please feel free to add me on LinkedIn or follow me on Twitter.