Introducing KeyLLM - Keyword Extraction with LLMs

Large Language Models (LLMs) are becoming smaller, faster, and more efficient, to the point where I started to consider them for iterative tasks like keyword extraction.

Having created KeyBERT, I felt that it was time to extend the package to also include LLMs. They are quite powerful and I wanted to prepare the package for when these models can be run on smaller GPUs.

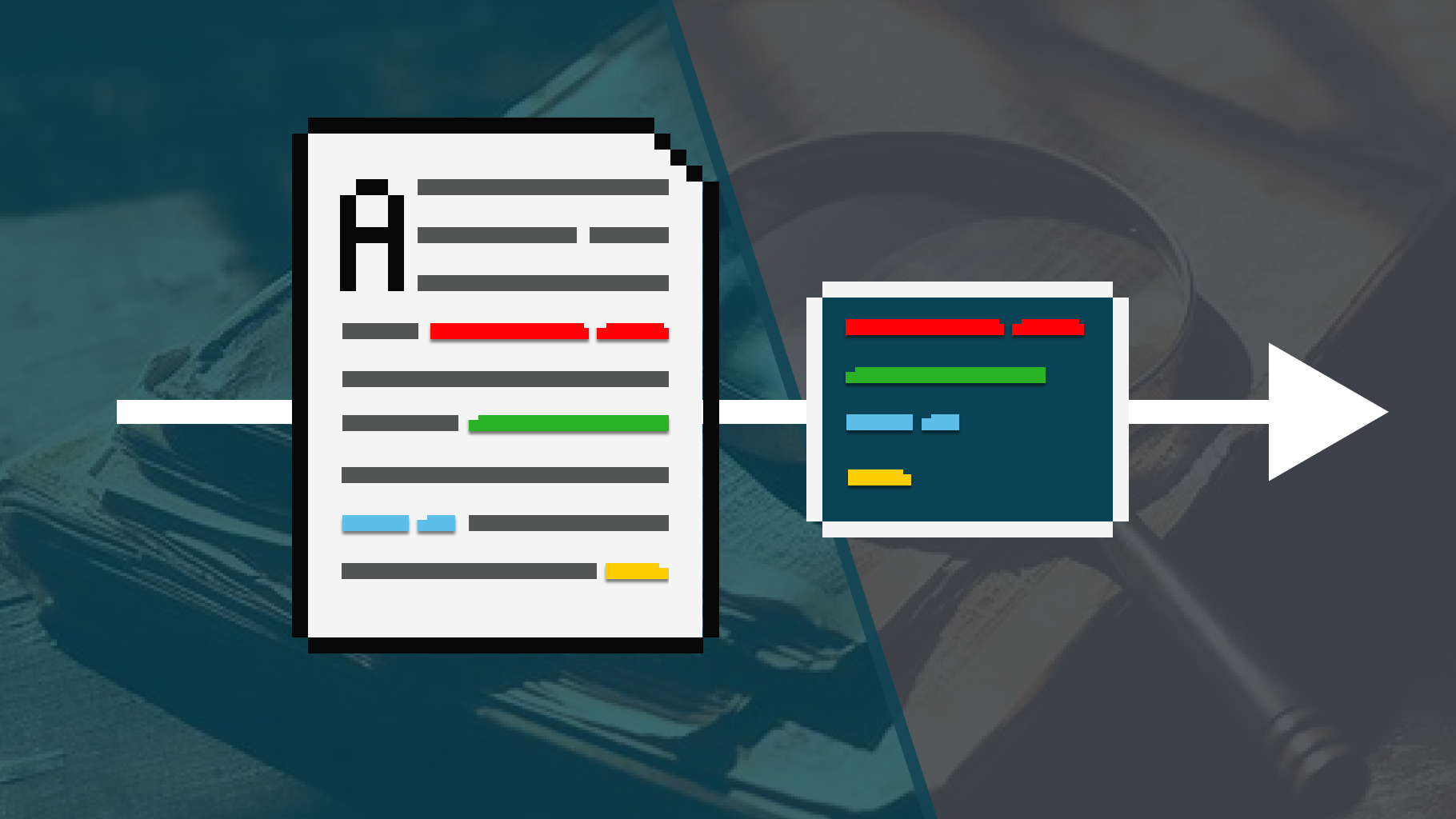

As such, introducing KeyLLM, an extension to KeyBERT that allows you to use any LLM to extract, create, or even fine-tune the keywords! In this tutorial, we will go through keyword extraction with KeyLLM using the recently released Mistral 7B model.

Update: I uploaded a video version to YouTube that goes more in-depth into how to use KeyLLM.

We will start by installing a number of packages that we are going to use throughout this example:

pip install --upgrade git+https://github.com/UKPLab/sentence-transformers

pip install keybert ctransformers[cuda]

pip install --upgrade git+https://github.com/huggingface/transformers

We install sentence-transformers from its main branch because it contains a fix for community detection, which we will use in the last few use cases. We do the same for transformers because the released version does not yet support the Mistral architecture.

Loading the Model

In previous tutorials, we demonstrated how to quantize the original model's weights so that it runs without memory problems.

Over the course of the last few months, TheBloke has been working hard on doing the quantization for hundreds of models for us.

This way, we can download the quantized model directly, which speeds things up quite a bit.
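To get a feel for why quantization matters, here is a back-of-the-envelope estimate of the memory needed for the weights alone. The parameter count (a round 7 billion) and the bit widths are assumptions for illustration; real GGUF files mix precisions and add overhead, so actual sizes will differ.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 7e9  # assumption: "7B" taken as a round 7 billion parameters

fp16_gb = weight_memory_gb(N_PARAMS, 16)  # unquantized half precision
q4_gb = weight_memory_gb(N_PARAMS, 4)     # idealized 4-bit quantization

print(f"16-bit: {fp16_gb:.1f} GB, 4-bit: {q4_gb:.1f} GB")
# 16-bit: 14.0 GB, 4-bit: 3.5 GB
```

Under these assumptions, the 4-bit weights need about a quarter of the memory of the 16-bit weights.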

We'll start by loading the model itself. We will offload 50 layers to the GPU, which reduces RAM usage and uses VRAM instead. If you run into memory errors, reducing this parameter (gpu_layers) might help!

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.
model = AutoModelForCausalLM.from_pretrained(
    "TheBloke/Mistral-7B-Instruct-v0.1-GGUF",
    model_file="mistral-7b-instruct-v0.1.Q4_K_M.gguf",
    model_type="mistral",
    gpu_layers=50,
    hf=True
)

After having loaded the model itself, we want to create a 🤗 Transformers pipeline.

The main benefit of doing so is that these pipelines are found in many tutorials and are often used as a backend in packages. Thus far, ctransformers is not as widely supported natively as transformers.

Loading the Mistral tokenizer with ctransformers is not yet possible as the model is quite new. Instead, we use the tokenizer from the original repository.

from transformers import AutoTokenizer, pipeline

# Tokenizer
tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-Instruct-v0.1")

# Pipeline
generator = pipeline(
    model=model, tokenizer=tokenizer,
    task='text-generation',
    max_new_tokens=50,
    repetition_penalty=1.1
)

📄 Prompt Engineering

Let's see if this works with a very basic example:

>>> response = generator("What is 1+1?")

>>> print(response[0]["generated_text"])

"""

What is 1+1?

A: 2

"""

Perfect! It can handle a very basic question. For the purpose of keyword extraction, let's explore whether it can handle a bit more complexity.

prompt = """

I have the following document:

* The website mentions that it only takes a couple of days to deliver but I still have not received mine

Extract 5 keywords from that document.

"""

response = generator(prompt)

print(response[0]["generated_text"])

We get the following output:

"""

I have the following document:

* The website mentions that it only takes a couple of days to deliver but I still have not received mine

Extract 5 keywords from that document.

**Answer:**

1. Website

2. Mentions

3. Deliver

4. Couple

5. Days

"""

It does great! However, if we want the structure of the output to stay consistent regardless of the input text, we will have to give the LLM an example.
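As an aside, the generated text above repeats the prompt before the answer. If you only need the answer, a small helper can cut the echoed prompt off. This is a minimal sketch: strip_prompt and the fake response are hypothetical and only mimic the shape of the pipeline output shown above.

```python
def strip_prompt(prompt: str, generated_text: str) -> str:
    """Return only the newly generated part of a text-generation output."""
    if generated_text.startswith(prompt):
        return generated_text[len(prompt):].strip()
    # Fall back to the full text if the prompt was not echoed verbatim.
    return generated_text.strip()


# Hypothetical response, shaped like the pipeline output (a list of dicts).
demo_prompt = "Extract 5 keywords from that document.\n"
fake_response = [{"generated_text": demo_prompt + "**Answer:**\n1. Website\n2. Mentions"}]

completion = strip_prompt(demo_prompt, fake_response[0]["generated_text"])
print(completion)
```

The 🤗 Transformers text-generation pipeline also accepts return_full_text=False, which should have a similar effect without any post-processing.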

This is where more advanced prompt engineering comes in. As with most Large Language Models, Mistral 7B expects a certain prompt format. This is tremendously helpful when we want to show it what a "correct" interaction looks like.

The prompt template is as follows:

<s>[INST] Instruction [/INST] Model answer</s>[INST] Follow-up instruction [/INST]

Based on that template, let's create a template for keyword extraction.

It needs to have two components:

* Example prompt - This will be used to show the LLM what a "good" output looks like

* Keyword prompt - This will be used to ask the LLM to extract the keywords

The first component, the example_prompt, will simply be an example of correctly extracting the keywords in the format that we are interested in.

The format is a key component since it will make sure that the LLM will always output keywords the way we want:

example_prompt = """

<s>[INST]

I have the following document:

- The website mentions that it only takes a couple of days to deliver but I still have not received mine.

Please give me the keywords that are present in this document and separate them with commas.

Make sure you to only return the keywords and say nothing else. For example, don't say:

"Here are the keywords present in the document"

[/INST] meat, beef, eat, eating, emissions, steak, food, health, processed, chicken</s>"""

The second component, the keyword_prompt, will essentially be a repeat of the example_prompt but with two changes:

* It will not have an output yet. That will be generated by the LLM.

* We use KeyBERT's [DOCUMENT] tag to indicate where the input document will go.

We can use the [DOCUMENT] tag to insert a document at a location of our choice. Having this option lets us change the structure of the prompt if needed, without the document being tied to a specific location.
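To make the role of the tag concrete, the sketch below fills [DOCUMENT] with plain string replacement. That KeyBERT substitutes the tag in essentially this way is an assumption here, and fill_document is a hypothetical helper, not part of the package.

```python
def fill_document(template: str, document: str) -> str:
    """Insert a document at the position of the [DOCUMENT] tag."""
    if "[DOCUMENT]" not in template:
        raise ValueError("template has no [DOCUMENT] tag")
    return template.replace("[DOCUMENT]", document)


# Shortened, hypothetical template for illustration only.
demo_template = (
    "[INST]\n"
    "I have the following document:\n"
    "- [DOCUMENT]\n"
    "\n"
    "Please give me the keywords that are present in this document.\n"
    "[/INST]"
)

filled_template = fill_document(demo_template, "I received my package!")
print(filled_template)
```

Because the tag is just a marker in the string, moving it to another position in the template changes where the document ends up without touching any code.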

keyword_prompt = """

[INST]

I have the following document:

- [DOCUMENT]

Please give me the keywords that are present in this document and separate them with commas.

Make sure you to only return the keywords and say nothing else. For example, don't say:

"Here are the keywords present in the document"

[/INST]

"""

Lastly, we combine the two prompts to create our final template:

>>> prompt = example_prompt + keyword_prompt

>>> print(prompt)

"""

<s>[INST]

I have the following document:

- The website mentions that it only takes a couple of days to deliver but I still have not received mine.

Please give me the keywords that are present in this document and separate them with commas.

Make sure you to only return the keywords and say nothing else. For example, don't say:

"Here are the keywords present in the document"

[/INST] meat, beef, eat, eating, emissions, steak, food, health, processed, chicken</s>

[INST]

I have the following document:

- [DOCUMENT]

Please give me the keywords that are present in this document and separate them with commas.

Make sure you to only return the keywords and say nothing else. For example, don't say:

"Here are the keywords present in the document"

[/INST]

"""

Now that we have our final prompt template, we can start exploring a couple of interesting new features in KeyBERT with KeyLLM. We will start by exploring KeyLLM using only the Mistral 7B model.
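If you want several solved examples instead of one, the same kind of template can be assembled programmatically. The sketch below assumes the layout used by the prompts above, that is, one `<s>` at the start and each solved example written as `[INST] ... [/INST] answer</s>`. The build_few_shot_prompt helper and the toy example are hypothetical.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], final_instruction: str) -> str:
    """Chain solved examples and one open instruction in the [INST] format."""
    turns = [
        f"[INST] {instruction} [/INST] {answer}</s>"
        for instruction, answer in examples
    ]
    # The beginning-of-sequence token appears once, at the very start.
    return "<s>" + "".join(turns) + f"[INST] {final_instruction} [/INST]"


toy_examples = [("Keywords for: 'Cats chase mice.'", "cats, mice, chase")]
few_shot = build_few_shot_prompt(toy_examples, "Keywords for: [DOCUMENT]")
print(few_shot)
```

The final instruction is left without an answer so that the model generates it, and it keeps the [DOCUMENT] tag so the result can still be used as a KeyLLM prompt.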

🗝️ Keyword Extraction with KeyLLM

Keyword extraction with vanilla KeyLLM couldn't be more straightforward; we simply ask it to extract keywords from a document.

This idea of extracting keywords from documents through an LLM is simple and makes it easy to test your LLM and its capabilities.

To use KeyLLM, we start by loading our LLM through keybert.llm.TextGeneration and give it the prompt template that we created before.

🔥 TIP 🔥: If you want to use a different LLM, like ChatGPT, you can find a full overview of implemented algorithms here:

from keybert.llm import TextGeneration

from keybert import KeyLLM

# Load it in KeyLLM

llm = TextGeneration(generator, prompt=prompt)

kw_model = KeyLLM(llm)

After preparing our KeyLLM instance, it is as simple as running .extract_keywords over your documents:

documents = [

"The website mentions that it only takes a couple of days to deliver but I still have not received mine.",

"I received my package!",

"Whereas the most powerful LLMs have generally been accessible only through limited APIs (if at all), Meta released LLaMA's model weights to the research community under a noncommercial license."

]

keywords = kw_model.extract_keywords(documents)

We get the following keywords:

[['deliver',

'days',

'website',

'mention',

'couple',

'still',

'receive',

'mine'],

['package', 'received'],

['LLM',

'API',

'accessibility',

'release',

'license',

'research',

'community',

'model',

'weights',

'Meta']]

These seem like a great set of keywords!

You can play around with the prompt to specify the kind of keywords you want extracted, how long they can be, and even in which language they should be returned if your LLM is multi-lingual.
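Since the prompt asks for keywords separated by commas, the raw completion is a single string that still has to be turned into a list. A minimal sketch of that step is shown below; parse_keywords is a hypothetical helper, and KeyLLM's own parsing may differ.

```python
from typing import List


def parse_keywords(llm_output: str) -> List[str]:
    """Split a comma-separated completion into a clean list of keywords."""
    keywords: List[str] = []
    for part in llm_output.split(","):
        keyword = part.strip().strip(".")
        if keyword and keyword not in keywords:  # drop blanks and duplicates
            keywords.append(keyword)
    return keywords


print(parse_keywords(" package, received, package,"))
# ['package', 'received']
```

This is also why a consistent output format matters: if the model adds an introductory sentence, that sentence would end up in the keyword list.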

🚀 Efficient Keyword Extraction with KeyLLM

Iterating your LLM over thousands of documents is not the most efficient approach! Instead, we can leverage embedding models to make the keyword extraction a bit more efficient.

This works as follows. First, we embed all of our documents, converting them to numerical representations. Second, we find out which documents are most similar to one another. We assume that highly similar documents will have the same keywords, so there is no need to extract keywords for every document. Third, we extract keywords from only one document in each cluster and assign them to all documents in the same cluster.

This is much more efficient and also quite flexible. The clusters are generated purely based on the similarity between documents, without taking cluster structures into account. In other words, it is essentially finding near-duplicate documents that we expect to have the same set of keywords.
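The three steps above can be sketched in plain Python. This is a simplified illustration, not KeyLLM's implementation: it uses a greedy grouping rule rather than the community detection from sentence-transformers, hand-made 2-D vectors instead of real embeddings, and a stand-in function (fake_extract) in place of the LLM call.

```python
import math
from typing import Callable, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def greedy_clusters(embeddings: Sequence[Sequence[float]], threshold: float) -> List[List[int]]:
    """Group each unassigned document with later ones at least `threshold` similar to it."""
    clusters: List[List[int]] = []
    assigned = set()
    for i in range(len(embeddings)):
        if i in assigned:
            continue
        members = [i] + [
            j for j in range(i + 1, len(embeddings))
            if j not in assigned and cosine(embeddings[i], embeddings[j]) >= threshold
        ]
        assigned.update(members)
        clusters.append(members)
    return clusters


def extract_once_per_cluster(docs, embeddings, threshold, extract: Callable[[str], List[str]]):
    """Call `extract` on one document per cluster and share the result."""
    keywords: List[List[str]] = [[] for _ in docs]
    for members in greedy_clusters(embeddings, threshold):
        shared = extract(docs[members[0]])  # the only "LLM call" for this cluster
        for idx in members:
            keywords[idx] = list(shared)
    return keywords


# Toy data: hand-made 2-D "embeddings" and a stand-in for the LLM call.
docs = ["delivery is late", "late delivery again", "model weights released"]
toy_embeddings = [[1.0, 0.1], [0.9, 0.2], [0.0, 1.0]]
calls = []


def fake_extract(doc: str) -> List[str]:
    calls.append(doc)
    return doc.split()


result = extract_once_per_cluster(docs, toy_embeddings, threshold=0.5, extract=fake_extract)
print(greedy_clusters(toy_embeddings, 0.5))  # [[0, 1], [2]]
print(len(calls))                            # 2
```

With three documents and two groups, the stand-in extractor runs twice instead of three times. The saving grows with the number of near-duplicates in the collection.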

To do this with KeyLLM, we embed our documents beforehand and pass them to .extract_keywords. The threshold indicates how similar documents will minimally need to be in order to be assigned to the same cluster.

Increasing this value to something like 0.95 will identify near-identical documents, whereas setting it to something like 0.5 will identify documents about the same topic.

from keybert import KeyLLM

from sentence_transformers import SentenceTransformer

# Extract embeddings

model = SentenceTransformer('BAAI/bge-small-en-v1.5')

embeddings = model.encode(documents, convert_to_tensor=True)

# Load it in KeyLLM

kw_model = KeyLLM(llm)

# Extract keywords

keywords = kw_model.extract_keywords(documents, embeddings=embeddings, threshold=.5)

We get the following keywords:

>>> keywords

[['deliver',

'days',

'website',

'mention',

'couple',

'still',

'receive',

'mine'],

['deliver',

'days',

'website',

'mention',

'couple',

'still',

'receive',

'mine'],

['LLaMA',

'model',

'weights',

'release',

'noncommercial',

'license',

'research',

'community',

'powerful',

'LLMs',

'APIs']]

In this example, we can see that the first two documents were clustered together and received the same keywords. Instead of passing all three documents to the LLM, we only pass two documents. This can speed things up significantly if you have thousands of documents.

🏆 Efficient Keyword Extraction with KeyBERT & KeyLLM

Before, we manually passed the embeddings to KeyLLM to essentially do a zero-shot extraction of keywords. We can further extend this example by leveraging KeyBERT.

Since KeyBERT generates keywords and embeds the documents, we can leverage that to not only simplify the pipeline but suggest a number of keywords to the LLM.

These suggested keywords can help the LLM decide on the keywords to use. Moreover, it allows for everything within KeyBERT to be used with KeyLLM!

This efficient keyword extraction with both KeyBERT and KeyLLM only requires three lines of code! We create a KeyBERT model and pass it the LLM along with the name of the embedding model we used previously:

from keybert import KeyLLM, KeyBERT

# Load it in KeyBERT

kw_model = KeyBERT(llm=llm, model='BAAI/bge-small-en-v1.5')

# Extract keywords

keywords = kw_model.extract_keywords(documents, threshold=0.5)

We get the following keywords:

>>> keywords

[['deliver',

'days',

'website',

'mention',

'couple',

'still',

'receive',

'mine'],

['package', 'received'],

['LLM',

'API',

'accessibility',

'release',

'license',

'research',

'community',

'model',

'weights',

'Meta']]

And that is it! With KeyLLM you are able to use Large Language Models to help create better keywords. We can choose to extract keywords from the text itself or ask the LLM to come up with keywords.

By combining KeyLLM with KeyBERT, we increase its potential by doing some computation and suggestions beforehand.

🔥 TIP 🔥: You can use [CANDIDATES] to pass the generated keywords in KeyBERT to the LLM as candidate keywords. That way, you can tell the LLM that KeyBERT has already generated a number of keywords and ask it to improve them.
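As a rough illustration of that tip, a prompt using both tags could look like the sketch below. The wording of the template is hypothetical, and the fill_prompt helper only mimics the substitution with plain string replacement; how KeyBERT fills the tags internally is an assumption here.

```python
from typing import List

# Hypothetical template: the wording is illustrative, only the two tags matter.
candidate_prompt = """
[INST]
I have the following document:
- [DOCUMENT]

With the following candidate keywords:
- [CANDIDATES]

Based on the information above, improve the candidate keywords to best describe the document.
Please separate the keywords with commas and say nothing else.
[/INST]
"""


def fill_prompt(template: str, document: str, candidates: List[str]) -> str:
    """Fill both tags; candidates are joined into one comma-separated line."""
    return (
        template
        .replace("[DOCUMENT]", document)
        .replace("[CANDIDATES]", ", ".join(candidates))
    )


filled_prompt = fill_prompt(candidate_prompt, "I received my package!", ["package", "received"])
print(filled_prompt)
```

A template like this would be combined with an example prompt and passed to TextGeneration in the same way as the prompt used earlier.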

Thank you for reading!
