Unit Testing for Data Scientists

As Data Science becomes a staple in large organizations, properly testing code is increasingly becoming part of a Data Scientist's skill set.

Imagine you have been creating a pipeline for predicting customer churn in your organization. A few months after deploying your solution, new variables become available that might improve its performance.

Unfortunately, after adding those variables, the code suddenly stops working! You are not familiar with the error message and you are having trouble finding your mistake.

This is where testing, and specifically unit testing, comes in!

Writing tests for specific modules improves the stability of your code and makes mistakes easier to spot. Especially in large projects, proper tests are a basic necessity.

No data solution is complete without some form of testing

This article focuses on a small but very important part of testing, arguably its foundation: unit tests. Below, I will discuss in detail why testing is necessary, what unit tests are, and how to integrate them into your Data Science projects.

1. Why would you test your code?

Although this seems like a no-brainer, there are actually many reasons for testing your code:

- Prevents unexpected output
- Simplifies updating code
- Increases the overall efficiency of developing code
- Helps to detect edge cases
- And, most importantly, prevents you from pushing broken code into production!

And that was only from the top of my head!

Even if you do not write production code, I would advise you to write tests for at least the most important modules of your code.

What if you run a deep learning pipeline and it fails after 3 hours on something you could easily have tested?

NOTE: I can definitely imagine not wanting to write dedicated tests for one-off analyses that only took you 2 days to write. That is okay! It is up to you to decide when tests seem helpful. The most important thing to realize is that they can save you a lot of work.

2. Unit Testing

Unit testing is a method of software testing that checks whether individual units of code are fit for use. For example, if you wanted to test the sum function in Python, you could write the following test:

```python
assert sum([1, 2, 3]) == 6
```

We know that 1+2+3=6, so it should pass without any problems.

We can extend this example by creating a custom sum function and testing it for tuples and lists:

```python
def new_sum(iterable):
    result = 0
    for val in iterable:
        result += val
    return result


def test_new_sum_list():
    assert new_sum([1, 2, 3]) == 6


def test_new_sum_tuple():
    assert new_sum((-1, 2, 3)) == 6


if __name__ == "__main__":
    test_new_sum_list()
    test_new_sum_tuple()
```

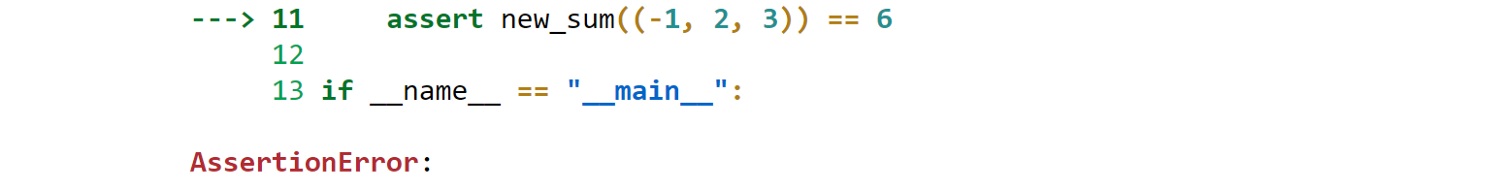

The output will be an assertion error for test_new_sum_tuple as -1+2+3 does not equal 6.
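Because tests are plain Python functions, it is cheap to add more of them for edge cases. Below is a minimal sketch of a few extra tests for new_sum; the test names are my own, and it assumes Pytest is installed (it is introduced in the next section). The float case uses pytest.approx, since 0.1 + 0.2 is not exactly 0.3 in floating-point arithmetic.

```python
import pytest


def new_sum(iterable):
    result = 0
    for val in iterable:
        result += val
    return result


def test_new_sum_empty():
    # Edge case: an empty iterable should return the starting value 0
    assert new_sum([]) == 0


def test_new_sum_generator():
    # Any iterable works, including a generator: 0 + 1 + 4 + 9 == 14
    assert new_sum(x * x for x in range(4)) == 14


def test_new_sum_floats():
    # 0.1 + 0.2 == 0.30000000000000004, so compare approximately
    assert new_sum([0.1, 0.2]) == pytest.approx(0.3)
```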

Unit testing helps you test pieces of code under many different circumstances. However, there is one important thing to remember:

Unit tests are not perfect, and it is nearly impossible to achieve 100% code coverage

Unit tests are great for catching bugs, but they will not catch everything, because tests are prone to the same logical errors as the code they are testing.

Ideally, you would also include integration tests, code reviews, formal verification, etc., but that is beyond the scope of this article.

3. Pytest

The issue with the example above is that it will stop running the first time it faces an AssertionError. Ideally, we want to see an overview of all tests that pass or fail.

This is where test runners, such as Pytest, come in. Pytest finds the tests you have defined, runs all of them, and produces a detailed report of which ones passed and why the others failed.

We start by installing Pytest:

```bash
pip install pytest
```

After doing so, create a test_new_sum.py file and fill it with the following code:

```python
def new_sum(iterable):
    result = 0
    for val in iterable:
        result += val
    return result


def test_new_sum_list():
    assert new_sum([1, 2, 3]) == 6


def test_new_sum_tuple():
    assert new_sum((-1, 2, 3)) == 6
```

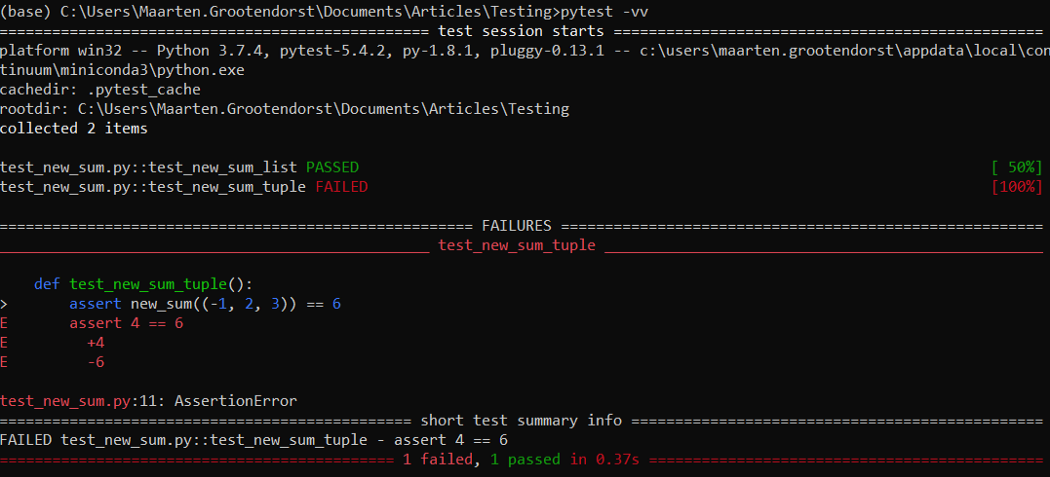

Finally, cd into the folder where test_new_sum.py is stored and simply run pytest -v. The result should look something like this:

As the image above shows, Pytest reports which tests passed and which failed.

Better still, for a failing test Pytest shows the values that were compared and the line where the assertion failed. This lets you quickly see what is going wrong!

4. Unit Testing for Data Scientists

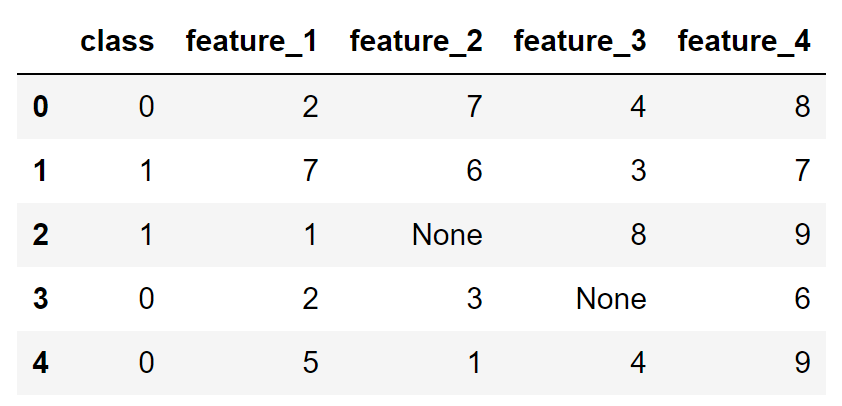

To understand how we could use Pytest for Data Science solutions, I will go through several examples. The following data is used for the examples:

In this data, we have a target class and several features that could be used as predictors.

Basic Usage

Let us start with a few simple preprocessing functions. We want to know the average value of each feature per class. To do that, we created the following basic function, aggregate_mean:

```python
def aggregate_mean(df, column):
    return df.groupby("class")[column].mean().to_dict()
```

Great! We pass in a DataFrame and a column name, and it returns a dictionary with the average of that column per class.

To test this, we write the following test_aggregate_mean.py file:

```python
import pandas as pd


def load_data():
    data = pd.DataFrame([[0, 2, 7, 4, 8],
                         [1, 7, 6, 3, 7],
                         [1, 1, "None", 8, 9],
                         [0, 2, 3, "None", 6],
                         [0, 5, 1, 4, 9]],
                        columns=[f"feature_{i}" if i != 0
                                 else "class" for i in range(5)])
    return data


def aggregate_mean(df, column):
    return df.groupby("class")[column].mean().to_dict()


def test_aggregate_mean_feature_1():
    data = load_data()
    expected = {0: 3, 1: 4}
    result = aggregate_mean(data, "feature_1")
    assert expected == result
```

When we run pytest -v it should give no errors!

Parametrize

Run multiple test cases with Parametrize

If we want to test many different scenarios, it would be cumbersome to write a separate copy of the test for each one. To prevent that, we can use Pytest’s parametrize marker.

Parametrize extends a test function so that it runs once for each scenario. We simply add the @pytest.mark.parametrize decorator and list the different scenarios.

For example, if we want to test the aggregate_mean function for features 1 and 3, we adapt the test as follows:

```python
import pytest

# load_data() and aggregate_mean() are defined as in test_aggregate_mean.py above


@pytest.mark.parametrize("column, expected", [("feature_1", {0: 3, 1: 4}),
                                              ("feature_3", {0: 4, 1: 5.5})])
def test_aggregate_mean(column, expected):
    data = load_data()
    result = aggregate_mean(data, column)
    assert expected == result
```

After running pytest -v, we see that the test case for feature 3 fails. As it turns out, the None value that we saw before is actually the string "None", so the column is not numeric and its mean cannot be computed!

We might not have found this bug ourselves if we had not tested for it.
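One possible fix, assuming the string "None" should simply be treated as a missing value, is to coerce the column to numbers before averaging. pd.to_numeric with errors="coerce" turns anything non-numeric into NaN, and the groupby mean skips NaN by default. The name aggregate_mean_numeric is hypothetical.

```python
import pandas as pd


def load_data():
    # Same toy data as above; "None" is a string, not a missing value
    return pd.DataFrame([[0, 2, 7, 4, 8],
                         [1, 7, 6, 3, 7],
                         [1, 1, "None", 8, 9],
                         [0, 2, 3, "None", 6],
                         [0, 5, 1, 4, 9]],
                        columns=[f"feature_{i}" if i != 0
                                 else "class" for i in range(5)])


def aggregate_mean_numeric(df, column):
    # Coerce non-numeric entries (such as "None") to NaN, then average per class;
    # NaN values are skipped by the mean
    values = pd.to_numeric(df[column], errors="coerce")
    return values.groupby(df["class"]).mean().to_dict()


if __name__ == "__main__":
    # class 0: mean of 4 and 4 (NaN skipped); class 1: mean of 3 and 8
    print(aggregate_mean_numeric(load_data(), "feature_3"))
```

Whether NaN is the right interpretation depends on the data; the important part is that the test surfaced the question.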

Fixtures

Prevent repeating code in your unit tests with Fixtures

When creating these test cases, we often want to run some code before every test case. Instead of repeating the same code in every test, we create fixtures that provide a common baseline for our tests.

They are often used to initialize database connections, load data, or instantiate classes.
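When set-up needs a matching clean-up, as with a database connection, a fixture can yield its value instead of returning it: code before the yield runs before the test, and code after it runs once the test has finished. A minimal sketch, assuming a hypothetical customers table in an in-memory SQLite database (create_customer_db and the table are made up for illustration):

```python
import sqlite3

import pytest


def create_customer_db():
    # Hypothetical helper: an in-memory SQLite database with a tiny table,
    # standing in for a real database connection
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, churned INTEGER)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [(1, 0), (2, 1), (3, 1)])
    return conn


@pytest.fixture
def connection():
    conn = create_customer_db()  # set-up: runs before each test that requests it
    yield conn                   # the yielded value is passed to the test
    conn.close()                 # teardown: runs after the test, even if it failed


def test_churned_customers(connection):
    (count,) = connection.execute(
        "SELECT COUNT(*) FROM customers WHERE churned = 1"
    ).fetchone()
    assert count == 2
```

Keeping the set-up in a plain helper also makes it reusable outside Pytest.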

Using the previous example, we would like to turn load_data() into a fixture. We change its name to data() in order to better represent the fixture. Then, @pytest.fixture(scope='module') is added to the function as a decorator. Finally, we add the fixture as a parameter to the unit test:

```python
import pandas as pd
import pytest


@pytest.fixture(scope='module')
def data():
    df = pd.DataFrame([[0, 2, 7, 4, 8],
                       [1, 7, 6, 3, 7],
                       [1, 1, "None", 8, 9],
                       [0, 2, 3, "None", 6],
                       [0, 5, 1, 4, 9]],
                      columns=[f'feature_{i}' if i != 0
                               else 'class' for i in range(5)])
    return df


def aggregate_mean(df, column):
    return df.groupby("class")[column].mean().to_dict()


@pytest.mark.parametrize("column, expected", [("feature_1", {0: 3, 1: 4}),
                                              ("feature_3", {0: 4, 1: 5.5})])
def test_aggregate_mean(data, column, expected):
    assert expected == aggregate_mean(data, column)
```

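As you can see, there is no need to call data() and assign its result to a variable: Pytest invokes the fixture automatically and passes its return value to the test through the data parameter. With scope='module', the fixture is created only once per test module and shared by all tests in it.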

Fixtures are a great way to increase readability and reduce the chance of any errors in the test functions.

Mocking

Imitate code in your pipeline to speed up testing with Mocking

In many data-driven solutions, you will work with large files that can slow down your pipeline tremendously. Writing unit tests for these pieces of code is difficult, because unit tests should run quickly.

Imagine you load a 2 GB CSV file with pd.read_csv and you would like to test whether the output of a pipeline is correct. With unittest.mock.patch, we can replace the output of pd.read_csv with our own to test the pipeline on smaller data.

Let’s say you have created the following codebase.py file for your pipeline:

```python
import pandas as pd


def aggregate_mean(df, column):
    return df.groupby("class")[column].mean().to_dict()


def pipeline(column):
    data = pd.read_csv("SOME_VERY_LARGE_FILE.csv")
    data = aggregate_mean(data, column)
    return data
```

Now, to test whether this pipeline works as intended, we want to use a small dataset for which we know the correct answer, so the test is both fast and precise. To do so, we patch pd.read_csv so that it returns the small dataset we have been using thus far:

```python
import pandas as pd
from unittest.mock import patch

from codebase import pipeline


@patch('codebase.pd.read_csv')
def test_aggregate_mean_feature_1(read_csv):
    read_csv.return_value = pd.DataFrame([[0, 2, 7, 4, 8],
                                          [1, 7, 6, 3, 7],
                                          [1, 1, "None", 8, 9],
                                          [0, 2, 3, "None", 6],
                                          [0, 5, 1, 4, 9]],
                                         columns=[f'feature_{i}' if i != 0
                                                  else 'class' for i in range(5)])
    result = pipeline("feature_1")
    assert result == {0: 3, 1: 4}
```

When we run pytest -v, the large .csv will not be loaded and instead replaced with our small dataset. The test runs quickly and we can easily test new cases!
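By default, patch replaces the target with a MagicMock, so besides returning our small DataFrame it also records how it was called. The sketch below is self-contained: pipeline is defined in the same file rather than imported from codebase.py, so it patches pandas.read_csv directly (this works because pipeline looks up pd.read_csv each time it runs), and it uses patch as a context manager instead of a decorator. The test name and the tiny DataFrame are illustrative.

```python
from unittest.mock import patch

import pandas as pd


def aggregate_mean(df, column):
    return df.groupby("class")[column].mean().to_dict()


def pipeline(column):
    data = pd.read_csv("SOME_VERY_LARGE_FILE.csv")
    return aggregate_mean(data, column)


def test_pipeline_reads_expected_file():
    small = pd.DataFrame({"class": [0, 0, 1], "feature_1": [2, 4, 7]})
    # Inside the with-block, pd.read_csv returns `small` instead of reading a file
    with patch("pandas.read_csv", return_value=small) as read_csv:
        result = pipeline("feature_1")
    # The mock remembers its calls, so we can also check which file was requested
    read_csv.assert_called_once_with("SOME_VERY_LARGE_FILE.csv")
    assert result == {0: 3.0, 1: 7.0}
```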

Coverage

Have you covered most of your code with unit tests?

Unit testing is not a perfect method by any means, but knowing how much of your code you are testing is extremely helpful. Especially when you have complicated pipelines, it would be nice to know if your tests cover most of your code.

To check for that, I would advise you to install Pytest-cov, a Pytest plugin that reports how much of your code is covered by tests.

Simply install Pytest-cov as follows:

```bash
pip install pytest-cov
```

After doing so, run the following command:

```bash
pytest --cov=codebase
```

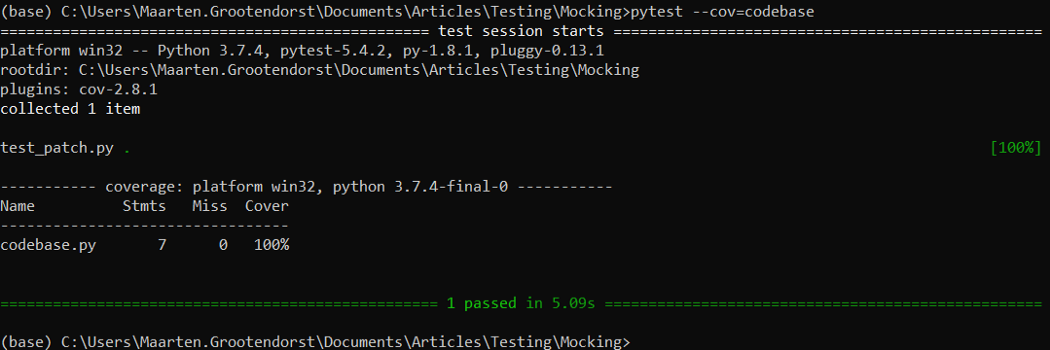

This means that we are running Pytest and check how much of the tests cover codebase.py. The result is the following output:

Fortunately, our test covers all code in codebase.py!

If any lines were not covered, their number would be shown in the Miss column.
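To see what a missed line looks like, consider a hypothetical variant of aggregate_mean that checks its input. If this function lived in codebase.py and only test_happy_path existed, the raise statement would never run and would be counted under Miss; test_missing_column uses pytest.raises to exercise that branch as well.

```python
import pandas as pd
import pytest


def aggregate_mean_checked(df, column):
    # Hypothetical variant of aggregate_mean with an input check
    if column not in df.columns:
        raise KeyError(f"Column {column!r} not found")
    return df.groupby("class")[column].mean().to_dict()


def test_happy_path():
    df = pd.DataFrame({"class": [0, 0, 1], "feature_1": [2, 4, 7]})
    assert aggregate_mean_checked(df, "feature_1") == {0: 3.0, 1: 7.0}


def test_missing_column():
    # Without this test, the raise line above would never be executed
    df = pd.DataFrame({"class": [0, 1], "feature_1": [1, 2]})
    with pytest.raises(KeyError):
        aggregate_mean_checked(df, "feature_9")
```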

All examples and code in this article can be found here.