Using Whisper and BERTopic to model Kurzgesagt's videos

A little over a month ago, OpenAI released a neural net for speech recognition called Whisper. It has gained quite some popularity over the last few weeks due to its accuracy, ease of use, and, most importantly, because it was open-sourced!

With these kinds of releases, I can hardly wait to get my hands on such a model and play around with it. However, I like to have a fun or interesting use case to actually use it for.

So I figured, why not use it for creating transcripts of a channel I always enjoy watching, Kurzgesagt!

It is an amazing channel with incredibly well-explained videos focused on animated educational content, ranging from topics about Climate Change and Dinosaurs to Black Holes and Geoengineering.

I decided to do a little more than just create some transcripts. Instead, let us use BERTopic to see if we can extract the main topics found in Kurzgesagt’s videos.

Hence, this article is a tutorial about using Whisper and BERTopic to extract transcripts from YouTube videos and run topic modeling on top of them.

1. Installation

Before going into the actual code, we first need to install a few packages, namely Whisper, BERTopic, and Pytube.

pip install --upgrade git+https://github.com/openai/whisper.git

pip install git+https://github.com/pytube/pytube.git@refs/pull/1409/merge

pip install bertopic

We are purposefully choosing a specific pull request in Pytube since it fixes an issue with empty channels.

At the very last step, I am briefly introducing an upcoming feature of BERTopic, which you can already install with:

pip install git+https://github.com/MaartenGr/BERTopic.git@refs/pull/840/merge

2. Pytube

We need to start off by extracting all the metadata that we need from Kurzgesagt’s YouTube channel. Using Pytube, we can create a Channel object that allows us to extract the URLs and titles of their videos.

# Extract all video_urls

from pytube import YouTube, Channel

c = Channel('https://www.youtube.com/c/inanutshell/videos/')

video_urls = c.video_urls

video_titles = [video.title for video in c.videos]

We are also extracting the titles as they might come in handy when we are visualizing the topics later on.

3. Whisper

When we have our URLs, we can start downloading the videos and extracting the transcripts. To create those transcripts, we make use of the recently released Whisper.

The model can be quite daunting for new users but it is essentially a sequence-to-sequence Transformer model which has been trained on several different speech-processing tasks. These tasks are fed into the encoder-decoder structure of the Transformer model which allows Whisper to replace several stages of the traditional speech-processing pipeline.

In other words, because it focuses on jointly representing multiple tasks, it can learn a variety of different processing steps all in a single model!

This is great because we can now use a single model to do all of the processing necessary. Below, we will import our Whisper model:

# Just two lines of code to load in a Whisper model!

import whisper

whisper_model = whisper.load_model("tiny")

Then, we iterate over our YouTube URLs, download the audio, and finally pass them through our Whisper model in order to generate the transcriptions:

# Infer all texts

texts = []

for url in video_urls[:100]:

    path = YouTube(url).streams.filter(only_audio=True)[0].download(filename="audio.mp4")

    transcription = whisper_model.transcribe(path)

    texts.append(transcription["text"])

And that is it! We now have transcriptions from 100 videos of Kurzgesagt.

NOTE: I opted for the tiny model because it is fast and still reasonably accurate, but Whisper also offers larger, more accurate models that are worth checking out.
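When looping over a hundred videos, a single failed download can stop the whole run. Below is a minimal sketch of a more forgiving loop. The function `transcribe_all` and its `download` and `transcribe` parameters are hypothetical names of my own, not part of Pytube or Whisper; in practice you would pass in small wrappers around the Pytube download and `whisper_model.transcribe` calls shown above.

```python
from typing import Callable, Iterable, List, Optional


def transcribe_all(
    urls: Iterable[str],
    download: Callable[[str], str],
    transcribe: Callable[[str], str],
) -> List[Optional[str]]:
    """Transcribe every URL; a failed video yields None instead of stopping the run."""
    texts: List[Optional[str]] = []
    for url in urls:
        try:
            path = download(url)            # e.g. a wrapper around the Pytube download
            texts.append(transcribe(path))  # e.g. a wrapper around whisper_model.transcribe
        except Exception as error:          # broad on purpose: any failure skips the video
            print(f"Skipping {url}: {error}")
            texts.append(None)              # keep positions aligned with the titles
    return texts


# Usage with stand-in functions instead of real downloads and a real model
def fake_download(url: str) -> str:
    if "bad" in url:
        raise RuntimeError("no audio stream")
    return url + ".mp4"


result = transcribe_all(["a", "bad", "c"], fake_download, lambda path: path.upper())
print(result)
```

Keeping a `None` placeholder for failed videos means the list still lines up with the video titles; the failed entries can be filtered out together with their titles before topic modeling.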

4. Transcript processing

BERTopic approaches topic modeling as a clustering task and as a result, assigns a single document to a single topic. To circumvent this, we can split our transcripts into sentences and run BERTopic on those:

from nltk.tokenize import sent_tokenize

# Sentencize the transcripts and track their titles

docs = []

titles = []

for text, title in zip(texts, video_titles):

sentences = sent_tokenize(text)

docs.extend(sentences)

titles.extend([title] * len(sentences))

Not only do we then have more data to train on, but we can also create more accurate topic representations.
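To see what the bookkeeping above produces, here is a small self-contained sketch on made-up data. It assumes a naive regex-based splitter (`split_sentences`, a hypothetical helper) as a stand-in for NLTK's `sent_tokenize`, so it runs without any downloads; the texts and titles are invented for illustration.

```python
import re


def split_sentences(text):
    """Naive stand-in for sent_tokenize: split after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


# Made-up transcripts and titles, only for illustration
texts = ["Ants are everywhere. They wage war!", "Black holes are strange."]
video_titles = ["Ant video", "Black hole video"]

docs, titles = [], []
for text, title in zip(texts, video_titles):
    sentences = split_sentences(text)
    docs.extend(sentences)
    # Repeat the title once per sentence so docs[i] always belongs to titles[i]
    titles.extend([title] * len(sentences))

for title, doc in zip(titles, docs):
    print(f"{title}: {doc}")
```

The important property is that `docs` and `titles` always have the same length, which is what later lets us trace every sentence back to its video.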

NOTE: There might or might not be a feature for topic distributions coming up in BERTopic…

5. BERTopic

BERTopic is a topic modeling technique that focuses on modularity, transparency, and human evaluation. It is a framework that allows users to, within certain boundaries, build their own custom topic model.

BERTopic works by following a linear pipeline of clustering and topic extraction:

At each step of the pipeline, it makes few assumptions about all steps that came before that. For example, the c-TF-IDF representation does not care which input embeddings are used. This guiding philosophy of BERTopic allows for the sub-components to easily be swapped out. As a result, you can build your model however you like:

Although we can use BERTopic in just a few lines, it is worthwhile to generate our embeddings up front so that we can reuse them later on without the need to regenerate them:

from sentence_transformers import SentenceTransformer

# Create embeddings from the documents

sentence_model = SentenceTransformer("paraphrase-multilingual-mpnet-base-v2")

embeddings = sentence_model.encode(docs)

Although the content of Kurzgesagt is in English, there might be some non-English terms out there, so I opted for a multilingual sentence-transformer model.

After having generated our embeddings, I wanted to tweak the sub-models slightly in order to best fit with our data:

from bertopic import BERTopic

from umap import UMAP

from hdbscan import HDBSCAN

from sklearn.feature_extraction.text import CountVectorizer

# Define sub-models

vectorizer = CountVectorizer(stop_words="english")

umap_model = UMAP(n_neighbors=15, n_components=5, min_dist=0.0, metric='cosine', random_state=42)

hdbscan_model = HDBSCAN(min_cluster_size=20, min_samples=2, metric='euclidean', cluster_selection_method='eom')

# Train our topic model with BERTopic

topic_model = BERTopic(

    embedding_model=sentence_model,

    umap_model=umap_model,

    hdbscan_model=hdbscan_model,

    vectorizer_model=vectorizer

).fit(docs, embeddings)

Now that we have fitted our BERTopic model, let us take a look at some of its topics. To do so, we run topic_model.get_topic_info().head(10) to get a dataframe of the most frequent topics:

We can see topics about food, cells, the galaxy, and many more!
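To make the c-TF-IDF step of the pipeline a bit more concrete, here is a rough pure-Python sketch of the idea: all sentences in a topic are joined into one big document, and each word is scored as its frequency within the topic times log(1 + A / f), where f is the word's frequency across all topics and A is the average number of words per topic. This is a simplified illustration with a hypothetical helper `c_tf_idf` and made-up sentences, not BERTopic's actual implementation, which works on sparse matrices and relies on the CountVectorizer for tokenization and stop-word removal.

```python
import math
from collections import Counter


def c_tf_idf(docs_per_topic):
    """Score each word per topic as tf(word, topic) * log(1 + A / f(word))."""
    # Treat all documents of a topic as one bag of words
    topic_counts = {
        topic: Counter(" ".join(docs).lower().split())
        for topic, docs in docs_per_topic.items()
    }
    # f(word): how often the word occurs across all topics
    total = Counter()
    for counts in topic_counts.values():
        total.update(counts)
    # A: average number of words per topic
    avg_words = sum(total.values()) / len(topic_counts)
    return {
        topic: {
            # Term frequency is normalized by the topic's total word count
            word: (count / sum(counts.values())) * math.log(1 + avg_words / total[word])
            for word, count in counts.items()
        }
        for topic, counts in topic_counts.items()
    }


# Made-up sentences grouped by (made-up) topic
toy_topics = {
    "space": ["the black hole eats the star", "the galaxy has a black hole"],
    "ants": ["the ants fight the other ants", "the ant colony grows"],
}
scores = c_tf_idf(toy_topics)
for topic, words in scores.items():
    top_words = sorted(words, key=words.get, reverse=True)[:3]
    print(topic, top_words)
```

Words that are frequent inside one topic but rare elsewhere get the highest scores, which is why a word shared by every topic, such as "the", is pushed down relative to topic-specific words.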

6. Visualize Topics

Although the model found some interesting topics, it seems like a lot of work to go through them all by hand. Instead, we can use a number of visualization techniques that make it a bit easier.

First, it might be worthwhile to generate some nicer-looking labels. To do so, we are going to generate our topic labels with generate_topic_labels.

We want the top 3 words, with a , separator and we are not so much interested in a topic prefix.

# Generate nicer looking labels and set them in our model

topic_labels = topic_model.generate_topic_labels(nr_words=3,

                                                 topic_prefix=False,

                                                 word_length=15,

                                                 separator=", ")

topic_model.set_topic_labels(topic_labels)

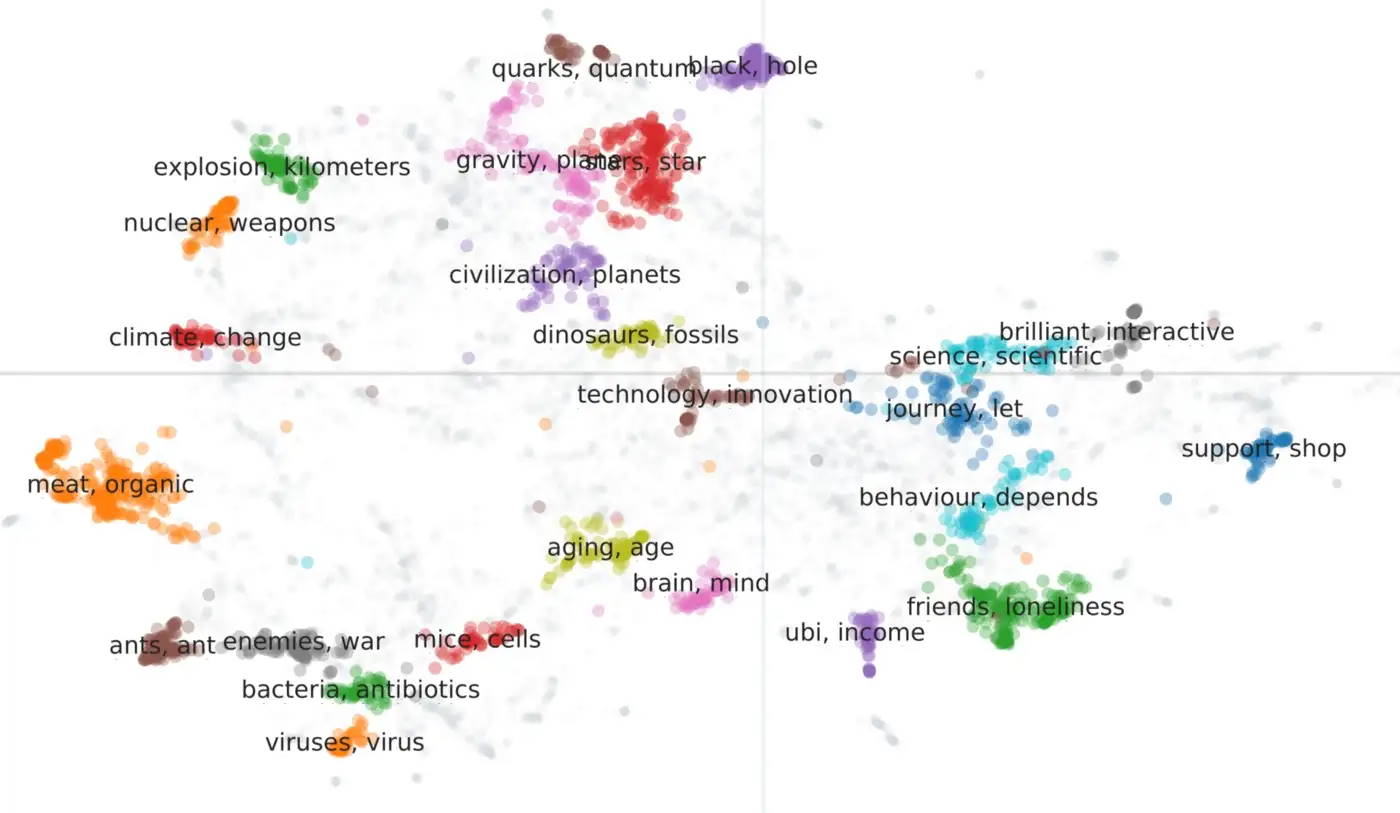

Now, we are ready to perform some interesting visualizations. First off, .visualize_documents! This method aims to visualize the documents and their corresponding topics interactively in a 2D space:

# Manually selected some interesting topics to prevent information overload

topics_of_interest = [33, 1, 8, 9, 0, 30, 27, 19, 16,

                      28, 44, 11, 21, 23, 26, 2, 37, 34, 3, 4, 5,

                      15, 17, 22, 38]

# I added the title to the documents themselves for easier interactivity

adjusted_docs = ["<b>" + title + "</b><br>" + doc[:100] + "..."

                 for doc, title in zip(docs, titles)]

# Visualize documents

topic_model.visualize_documents(

    adjusted_docs,

    embeddings=embeddings,

    hide_annotations=False,

    topics=topics_of_interest,

    custom_labels=True

)

As can be seen in the visualization above, we have a number of very different topics, ranging from dinosaurs and climate change to bacteria and even ants!

7. Topics per video

Since we have split each video up into sentences, we can model the distribution of topics per video. I recently saw a video called

“What Happens if a Supervolcano Blows Up?”

So let’s see which topics can be found in that video:

import pandas as pd

# Topic frequency in "What Happens if a Supervolcano Blows Up?"

video_topics = [topic_model.custom_labels_[topic+1]

                for topic, title in zip(topic_model.topics_, titles)

                if title == "What Happens if a Supervolcano Blows Up?"

                and topic != -1]

counts = pd.DataFrame({"Topic": video_topics}).value_counts(); counts

# NOTE: `classes` is not defined anywhere in this article; it needs to hold one class label per entry in docs

topics_per_class = topic_model.topics_per_class(docs, classes=classes)

As expected, it seems to be mostly related to a topic about volcanic eruptions but also explosions in general.

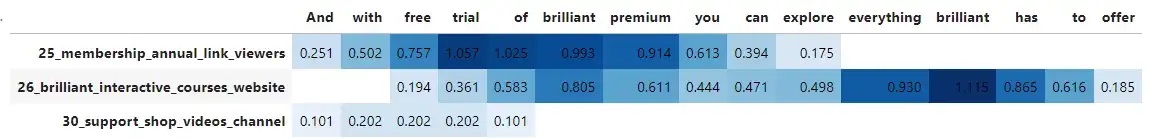
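The counting logic above can be tried out without a fitted model. The sketch below uses made-up stand-ins for `topic_model.custom_labels_`, `topic_model.topics_`, and `titles`, and `collections.Counter` instead of pandas. It assumes, as the `topic+1` offset in the snippet above suggests, that the label list starts with the outlier topic -1 at position 0; the helper name `topic_counts_for` is hypothetical.

```python
from collections import Counter

# Made-up stand-ins for topic_model.custom_labels_, topic_model.topics_ and titles
custom_labels = ["outliers", "volcano, eruption, magma", "explosion, energy, blast"]
topics = [0, -1, 1, 0, 0, 1]  # one topic id per sentence, -1 marks an outlier
titles = ["Volcano", "Volcano", "Volcano", "Ants", "Volcano", "Ants"]


def topic_counts_for(video_title):
    """Count how often each topic label occurs in the sentences of one video."""
    labels = [
        custom_labels[topic + 1]  # +1 because index 0 is assumed to hold topic -1
        for topic, title in zip(topics, titles)
        if title == video_title and topic != -1  # skip outlier sentences
    ]
    return Counter(labels).most_common()


volcano_counts = topic_counts_for("Volcano")
print(volcano_counts)
```

Because outlier sentences are dropped before counting, the counts only reflect sentences that the model could confidently assign to a topic.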

8. Topic Distribution

In the upcoming BERTopic v0.13 release, there is the possibility to approximate the topic distributions for any document regardless of its size.

The method works by creating a sliding window over the document and calculating each window's similarity to each topic:

We can generate these distributions for all of our documents by running the following and making sure that we calculate the distributions on a token level:

# We need to calculate the topic distributions on a token level

(topic_distr,

topic_token_distr) = topic_model.approximate_distribution(

docs, calculate_tokens=True

)
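The sliding-window idea described above can be illustrated with a toy version in plain Python. This is only a sketch under simplifying assumptions: topics are represented by hand-made keyword weights, windows are compared to topics with a bag-of-words cosine similarity, and the function name `toy_topic_distribution` and its `window` and `stride` parameters are my own choices for this example. BERTopic's own implementation differs in its details.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two sparse word -> weight mappings."""
    dot = sum(a[w] * b.get(w, 0.0) for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def toy_topic_distribution(tokens, topic_words, window=4, stride=1):
    """Return (document-level topic distribution, per-token topic scores)."""
    token_scores = [{topic: 0.0 for topic in topic_words} for _ in tokens]
    last_start = max(len(tokens) - window, 0)
    for start in range(0, last_start + 1, stride):
        span = range(start, min(start + window, len(tokens)))
        bag = Counter(tokens[i] for i in span)
        for topic, words in topic_words.items():
            similarity = cosine(bag, words)
            # Every token inside the window receives the window's similarity
            for i in span:
                token_scores[i][topic] += similarity
    totals = {t: sum(s[t] for s in token_scores) for t in topic_words}
    norm = sum(totals.values())
    distribution = {t: (v / norm if norm else 0.0) for t, v in totals.items()}
    return distribution, token_scores


# Hand-made topic keywords, purely illustrative
topic_words = {
    "brilliant": {"brilliant": 1.0, "premium": 0.8, "trial": 0.6},
    "space": {"galaxy": 1.0, "star": 0.7},
}
tokens = "with free trial of brilliant premium you can explore the galaxy".split()
distribution, token_scores = toy_topic_distribution(tokens, topic_words)
print(distribution)
print(token_scores[4])  # scores for the token "brilliant"
```

Since every token collects scores from all windows that contain it, a single token can end up with non-zero scores for several topics at once.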

Now we need to choose a piece of text over which to model the topics. For that, I thought it would be interesting to explore how the model handles the advertisement of Brilliant at the end of Kurzgesagt’s videos:

And with free trial of brilliant premium you can explore everything brilliant has to offer.

We input that document and run our visualization:

# Create a visualization using a styled dataframe if Jinja2 is installed

df = topic_model.visualize_approximate_distribution(docs[100], topic_token_distr[100]); df

As we can see, it seems to pick up topics about Brilliant and memberships, which seems to make sense in this case.

Interestingly, with this approach, we can take into account that there are not only multiple topics per document but even multiple topics per token!