Transform your ML model to PyTorch with Hummingbird

Over the last few years, the capabilities of Deep Learning have increased tremendously. With that, standards for serving Neural Networks, such as ONNX and TVM, have found their way to the masses.

That popularity has led to a focus on optimizing Deep Learning pipelines, training, inference, and deployment by leveraging tensor computations.

In contrast, traditional Machine Learning models such as Random Forests typically run inference on the CPU and could benefit from GPU-based hardware accelerators.

Transform your trained Machine Learning model to PyTorch with Hummingbird

Now, what if we could use the many advantages of Neural Networks in our traditional Random Forest? Even better, what if we could transform the Random Forest and leverage GPU-accelerated inference?

This is where Microsoft’s Hummingbird comes in! It transforms your Machine Learning model into tensor computations so that it can use GPU acceleration to speed up inference.

In this article, I will not only describe how to use the package, but also the underlying theory from the corresponding papers and the roadmap.

NOTE: Hummingbird is only used to speed up prediction, not training!

1. Theory

Before delving into the package, it is important to understand why this transformation is desirable and how it can be done.

Why transform your model to tensors?

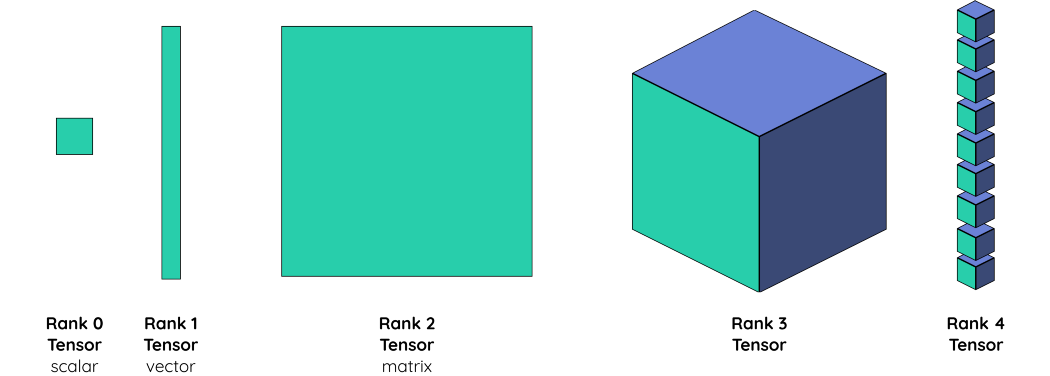

In part, a tensor is an N-dimensional array of data (see figure below). In the context of TensorFlow, it can be seen as a generalization of a Matrix that allows you to have multidimensional arrays. You can perform optimized mathematical operations on them without knowing what each dimension represent semantically. For example, like how matrix multiplication is used to manipulated several vectors simultaneously.
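The following snippet illustrates both ideas. It uses NumPy, which the article does not otherwise mention, and the arrays are arbitrary. The sketch builds a 3-dimensional tensor and then applies a single matrix to several vectors in one multiplication:

```python
import numpy as np

# A rank-3 tensor: for example a batch of 2 items, each a 3x4 grid of numbers.
t = np.arange(24, dtype=float).reshape(2, 3, 4)
print(t.ndim, t.shape)  # 3 (2, 3, 4)

# Three 4-dimensional vectors stacked as the rows of a matrix.
X = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0],
              [1.0, 1.0, 1.0, 1.0]])
# A linear map from 4-dimensional to 2-dimensional vectors.
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0],
              [2.0, 0.0]])

# One matrix multiplication transforms all three vectors at once...
Y = X @ W
# ...and matches transforming them one at a time in a Python loop.
Y_loop = np.stack([x @ W for x in X])
assert np.allclose(Y, Y_loop)

# The same operation extends to higher-rank tensors: W is applied to every
# 4-dimensional vector along the last axis of t.
print((t @ W).shape)  # (2, 3, 2)
```

The operation never needs to know what the rows "mean". It applies the same arithmetic to all of them, which is what makes it easy to parallelize.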

There are several reasons for transforming your model into tensors:

- Tensors, as the backbone of Deep Learning, have been widely researched in the context of making Deep Learning accessible to the masses. Tensor operations had to be optimized significantly to make Deep Learning usable at a larger scale. This speeds up inference tremendously, which cuts the cost of making new predictions.

- It allows for a more unified standard than traditional Machine Learning models offer. By using tensors, we can transform our traditional model to ONNX and use the same standard across all our AI solutions.

- Any optimization in Neural Network frameworks is likely to result in the optimization of your traditional Machine Learning model.

Transforming algorithmic models

Transformation to tensors is not a trivial task, as there are two branches of models: algebraic models (e.g., linear models) and algorithmic models (e.g., decision trees). This increases the complexity of mapping a model to tensors.

Of the two, algorithmic models are especially difficult to map. Tensor computation is known for performing bulk or symmetric operations, whereas algorithmic models are inherently asymmetric: each input follows its own data-dependent path through the model.
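The following minimal sketch makes the contrast concrete. The weights and the tiny hand-written tree are made up for illustration and are not taken from Hummingbird. The linear model scores the whole batch with one matrix product. The tree, written the usual way, has to branch separately for every sample:

```python
import numpy as np

X = np.array([[1.0, 2.0],
              [3.0, 0.5],
              [0.0, 4.0]])

# Algebraic model (a made-up linear regression): one bulk tensor operation
# scores the whole batch, with the same computation for every row.
w = np.array([0.5, -1.0])
b = 2.0
linear_pred = X @ w + b


# Algorithmic model (a made-up two-level decision tree): each row follows its
# own data-dependent path, so the natural implementation branches per sample.
def tree_predict(x):
    if x[0] < 2.0:
        return 0 if x[1] < 3.0 else 1
    return 2


tree_pred = np.array([tree_predict(x) for x in X])
print(linear_pred)
print(tree_pred)  # [0 2 1]
```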

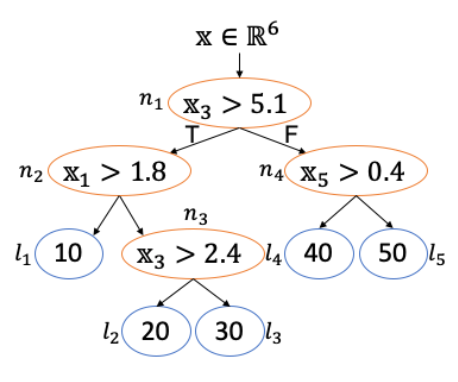

Let’s use the decision tree below as an example:

The decision tree is split into three parts:

- Input feature vector
- Four decision nodes (orange)
- Five leaf nodes (blue)

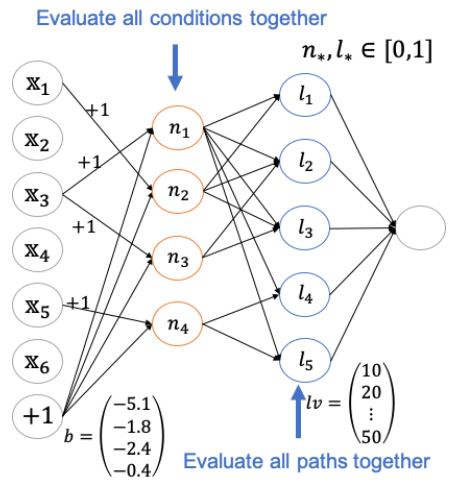

The resulting neural network’s first layer is that of the input feature vector which is connected to the four decision nodes (orange). Here, all conditions are evaluated together. Next, all leaf nodes are evaluated together by using matrix multiplication.

The resulting decision tree, as a neural network, can be seen below:

The resulting Neural Network introduces a degree of redundancy, since all conditions are evaluated, whereas a traditional decision tree only evaluates the conditions along a single path. This redundancy is, in part, offset by the Neural Network’s ability to vectorize computations.
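The layer-by-layer evaluation can be sketched in NumPy. This is a minimal illustration loosely following the GEMM strategy from the Hummingbird paper, not Hummingbird's actual code. A few details are assumptions for this example only:

- The tree and its thresholds are hypothetical.
- The names `gemm_tree_predict` and `branching_predict` are hypothetical.
- The sketch sends `x < threshold` to the left child, whereas scikit-learn itself uses `x <= threshold`.

Every decision node is evaluated for every sample, and matrix products then pick out the single leaf each sample reaches:

```python
import numpy as np

# Hypothetical tree (not the one in the figure): 3 features, 4 decision nodes, 5 leaves.
#   N0: x0 < 0.5 ? N1 : N2
#   N1: x1 < 2.0 ? leaf0 (class 0) : leaf1 (class 1)
#   N2: x2 < 1.0 ? N3 : leaf4 (class 0)
#   N3: x0 < 2.5 ? leaf2 (class 1) : leaf3 (class 2)

# A[f, n] = 1 if decision node n tests feature f
A = np.array([[1, 0, 0, 1],
              [0, 1, 0, 0],
              [0, 0, 1, 0]], dtype=float)
# B[n] = threshold of decision node n
B = np.array([0.5, 2.0, 1.0, 2.5])
# C[n, l] = +1 if leaf l lies in the left subtree of node n, -1 if in the right subtree, else 0
C = np.array([[1,  1, -1, -1, -1],
              [1, -1,  0,  0,  0],
              [0,  0,  1,  1, -1],
              [0,  0,  1, -1,  0]], dtype=float)
# D[l] = number of left turns on the path to leaf l
D = (C > 0).sum(axis=0)
# E[l, k] = 1 if leaf l predicts class k
E = np.array([[1, 0, 0],
              [0, 1, 0],
              [0, 1, 0],
              [0, 0, 1],
              [1, 0, 0]], dtype=float)


def gemm_tree_predict(X):
    # Layer 1: evaluate all four decision nodes for all samples at once.
    T = (X @ A < B).astype(float)
    # Layer 2: a leaf is reached only if every condition on its path agrees,
    # i.e. all left-turn conditions are true and all right-turn conditions are false.
    T = (T @ C == D).astype(float)
    # Layer 3: map the one-hot leaf indicator to class scores and pick the class.
    return (T @ E).argmax(axis=1)


def branching_predict(x):
    # The same tree written as ordinary if/else logic, one sample at a time.
    if x[0] < 0.5:
        return 0 if x[1] < 2.0 else 1
    if x[2] < 1.0:
        return 1 if x[0] < 2.5 else 2
    return 0


X = np.array([[0.0, 1.0, 0.0],
              [0.0, 3.0, 0.0],
              [1.0, 0.0, 0.5],
              [3.0, 0.0, 0.5],
              [1.0, 0.0, 5.0]])
print(gemm_tree_predict(X))               # [0 1 1 2 0]
print([branching_predict(x) for x in X])  # [0, 1, 1, 2, 0]
```

The GEMM version evaluates all four conditions for every row, which is exactly the redundancy described above. However, it does so with three matrix products, the kind of bulk operation that GPUs handle well.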

NOTE: There are many more strategies for the transformation of models into tensors, which are described in their papers, here and here.

Speed-up

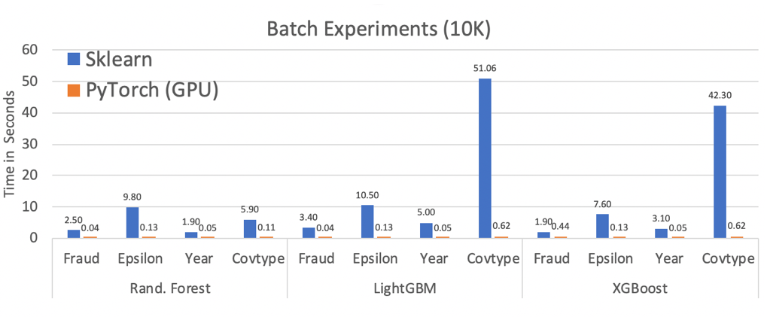

According to their papers, GPU acceleration yields a massive inference speed-up compared to the traditional models.

The results above clearly indicate that it might be worthwhile to transform your Forest to a Neural Network.

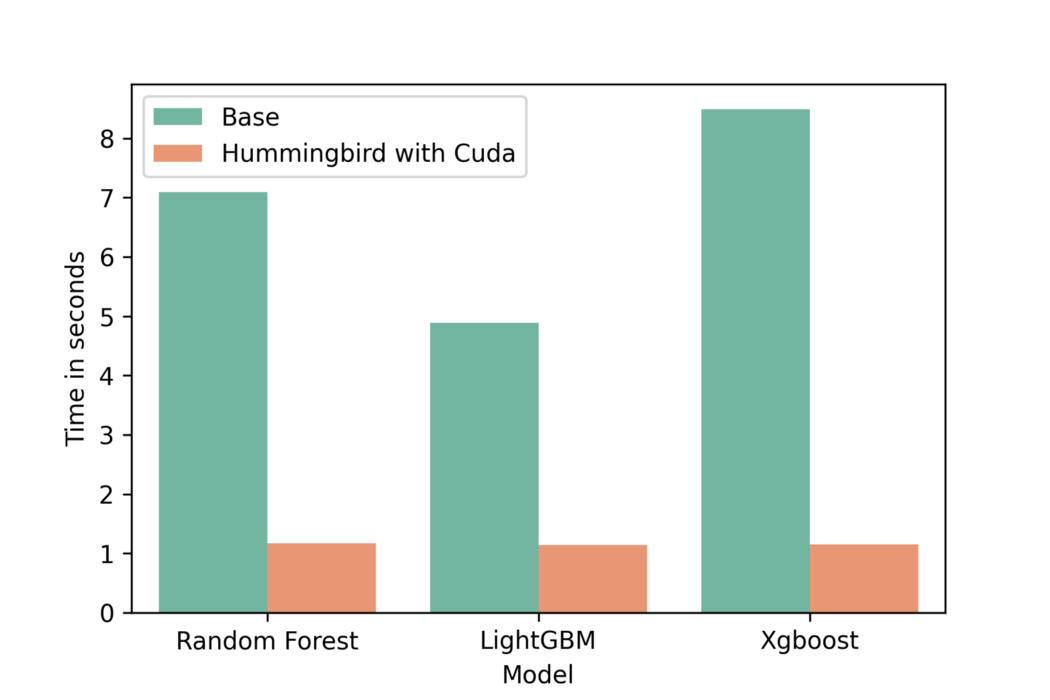

I wanted to see for myself how the results would look like on a Google Colaboratory server with a GPU enabled. Thus, I performed a quick, and by no means, scientific, experiment on Google Colaboratory which yielded the following results:

We can clearly see a large speed-up in inference on new data when using a single dataset.
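If you want to run a similar comparison yourself, a timing helper along these lines may be a starting point. It is a hypothetical sketch, not the code behind the results above, and `time_predict` is an illustrative name. The commented usage assumes the `rf_model`, `model`, and `X` objects from the Usage section. Because GPU work runs asynchronously, the timings are only meaningful if `predict` returns its results on the CPU (e.g. as NumPy arrays), which forces the computation to finish.

```python
import statistics
import time


def time_predict(predict, X, repeats=5):
    """Median wall-clock time in seconds of predict(X) over `repeats` runs."""
    # Warm-up call so one-off costs (e.g. CUDA initialization) are not measured.
    predict(X)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict(X)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Hypothetical usage, assuming rf_model, model, and X from the Usage section:
# cpu_seconds = time_predict(rf_model.predict, X)
# gpu_seconds = time_predict(model.predict, X)
# print(f"speed-up: {cpu_seconds / gpu_seconds:.1f}x")
```

Taking the median rather than the mean keeps a single slow run (for example, one interrupted by other work on a shared Colab machine) from dominating the result.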

2. Usage


Using the package is, fortunately, exceedingly simple. It is clear that the authors have spent significant time making sure the package can be used intuitively and across many models.

We start by installing the package through pip:

```shell
pip install hummingbird-ml
```

If you would also like to install the LightGBM and XGBoost dependencies:

```shell
pip install 'hummingbird-ml[extra]'
```

Then, we create a scikit-learn model and train it on the data:

```python
from sklearn import datasets
from sklearn.ensemble import RandomForestClassifier

# Load the Iris dataset and fit a Random Forest on it
iris = datasets.load_iris()
X, y = iris.data, iris.target
rf_model = RandomForestClassifier().fit(X, y)
```

To transform it to PyTorch, all we have to do is import Hummingbird and use its convert function:

```python
import torch
from hummingbird.ml import convert

# First, we convert the model to PyTorch
model = convert(rf_model, 'pytorch')

# To run predictions on the GPU, we move the model to CUDA
# (falling back to the CPU if no GPU is available)
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model.to(device)

predictions = model.predict(X)
```

The resulting model is simply a torch.nn.Module and can be used exactly as you would normally work with PyTorch.

It was the ease of transforming to PyTorch that first drew my attention to this package. Just a few lines and you have transformed your model!
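Since the conversion changes how predictions are computed, it is worth checking that the converted model agrees with the original. Below is a minimal sketch of such a check, assuming NumPy. `predictions_match` is a hypothetical helper, not part of Hummingbird:

```python
import numpy as np


def predictions_match(reference_predict, converted_predict, X):
    """Return True if both predict functions give (numerically) the same output on X."""
    expected = np.asarray(reference_predict(X))
    actual = np.asarray(converted_predict(X))
    # Shapes must agree exactly; values are compared with np.allclose so that tiny
    # float32-vs-float64 differences (e.g. in probabilities) are tolerated.
    return expected.shape == actual.shape and bool(np.allclose(expected, actual))


# Hypothetical usage with the models from above:
# assert predictions_match(rf_model.predict, model.predict, X)
```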

3. Roadmap

Currently, the following models are implemented:

- Most scikit-learn models (e.g., Decision Tree, Regression, and SVC)

- LightGBM (Classifier and Regressor)

- XGBoost (Classifier and Regressor)

- ONNX.ML (TreeEnsembleClassifier and TreeEnsembleRegressor)

Although some models are still missing, their roadmap indicates that they are on their way:

- Feature selectors (e.g., VarianceThreshold)

- Matrix Decomposition (e.g., PCA)

- Feature Pre-processing (e.g., MinMaxScaler and OneHotEncoder)

You can find the full roadmap of Hummingbird here.